Amazon Personalize launches new recipes supporting larger item catalogs with lower latency

AWS Machine Learning Blog

MAY 2, 2024

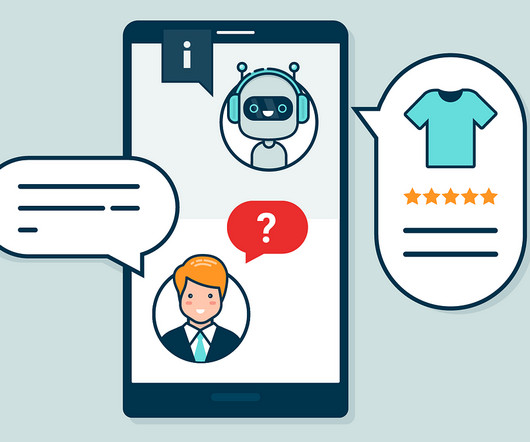

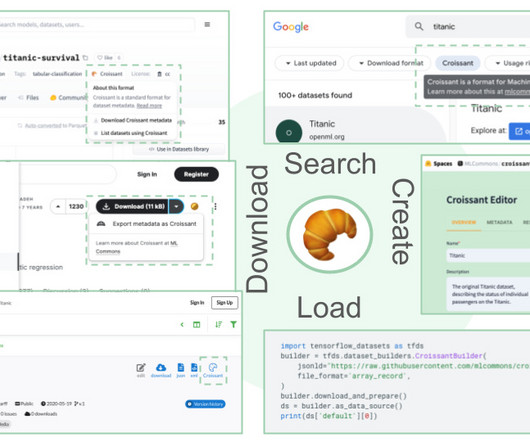

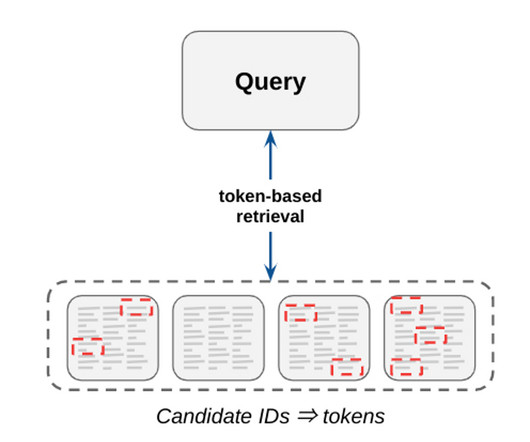

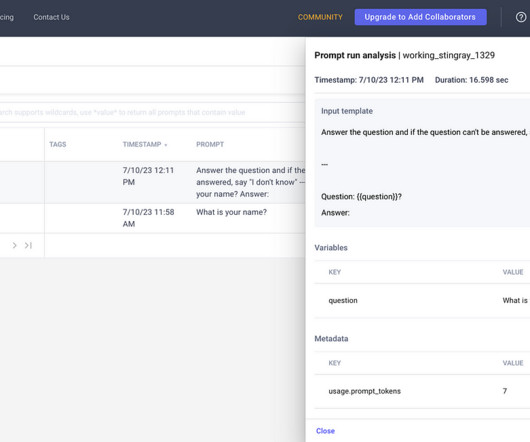

Return item metadata in inference responses – The new recipes enable item metadata by default at no extra charge, so you can return metadata such as genres, descriptions, and availability in inference responses. If you use Amazon Personalize with generative AI, you can also feed the returned metadata into prompts.
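As a rough illustration of the metadata feature, the sketch below requests recommendations together with item metadata and then folds that metadata into a prompt. It assumes the `metadataColumns` parameter of the `GetRecommendations` runtime API and a response containing an `itemList` whose entries carry a `metadata` map. The helper names (`recommend_with_metadata`, `build_prompt`), the column name `GENRES`, the prompt wording, and the campaign ARN are hypothetical placeholders, not part of the original post.

```python
from typing import Any, Dict, List


def recommend_with_metadata(
    client: Any,
    campaign_arn: str,
    user_id: str,
    columns: List[str],
    num_results: int = 5,
) -> List[Dict[str, Any]]:
    """Request recommendations plus the given item metadata columns.

    `client` is assumed to behave like boto3.client("personalize-runtime").
    """
    response = client.get_recommendations(
        campaignArn=campaign_arn,
        userId=user_id,
        numResults=num_results,
        # Ask for columns from the Items dataset to be returned with each item.
        metadataColumns={"ITEMS": columns},
    )
    # Flatten each entry into one dict: the item ID plus its metadata fields.
    # The exact casing of metadata keys in the response is an assumption here.
    return [
        {"itemId": item["itemId"], **item.get("metadata", {})}
        for item in response.get("itemList", [])
    ]


def build_prompt(items: List[Dict[str, Any]]) -> str:
    """Turn recommended items and their metadata into a text prompt (hypothetical wording)."""
    lines = []
    for item in items:
        details = ", ".join(f"{k}={v}" for k, v in item.items() if k != "itemId")
        lines.append(f"- {item['itemId']}: {details}")
    return "Write a short recommendation blurb for these items:\n" + "\n".join(lines)
```

In practice you would create the client with `boto3.client("personalize-runtime")` and pass the ARN of a campaign built on one of the new recipes. The resulting prompt string can then be sent to whichever generative model you use.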

Let's personalize your content