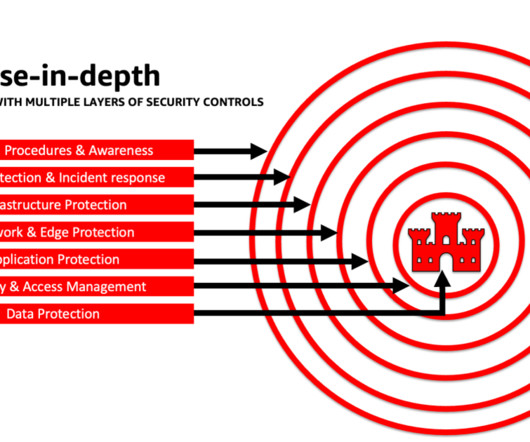

Architect defense-in-depth security for generative AI applications using the OWASP Top 10 for LLMs

AWS Machine Learning Blog

JANUARY 26, 2024

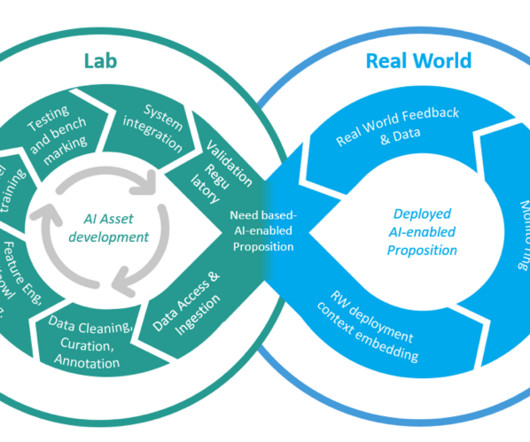

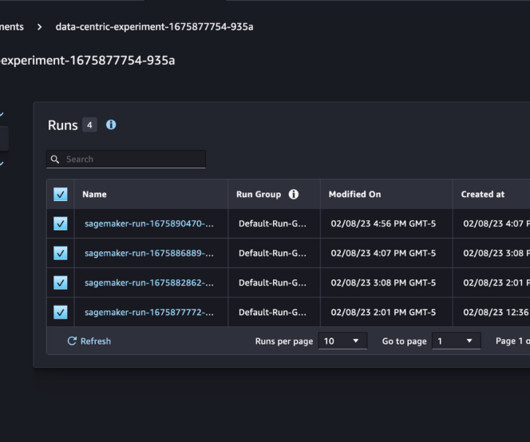

Many customers are looking for guidance on how to manage security, privacy, and compliance as they develop generative AI applications. You can also use Amazon SageMaker Model Monitor to evaluate the quality of SageMaker ML models in production and to notify you when there is drift in data quality, model quality, and feature attribution.