How Are AI Projects Different?

Towards AI

AUGUST 16, 2023

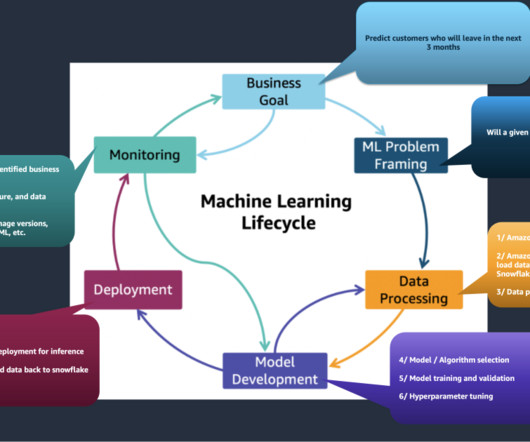

Michael Dziedzic on Unsplash

I am often asked by prospective clients to explain the artificial intelligence (AI) software process. More recently, managers with extensive software development and data science experience who wanted to implement MLOps have asked me the same question.
