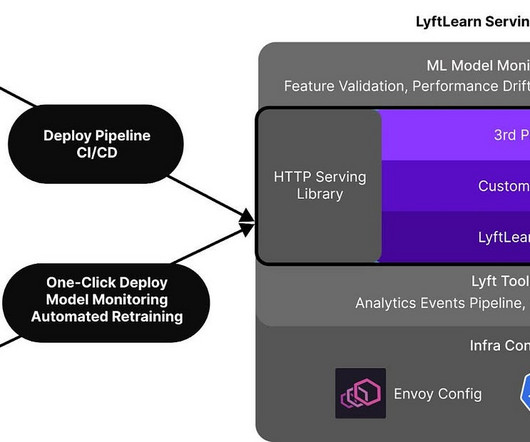

Lyft explains its Model Serving Infrastructure

Bugra Akyildiz

MARCH 12, 2023

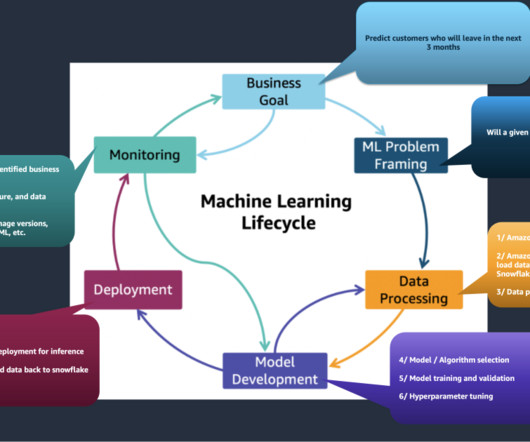

Uber wrote about how they built a data drift detection system. Make sure your vision is aligned with your power customers. It’s important to align the vision for a new system with the needs of power customers. In our case, that meant prioritizing stability, performance, and flexibility above all else.
