Importance of Machine Learning Model Retraining in Production

Heartbeat

OCTOBER 30, 2023

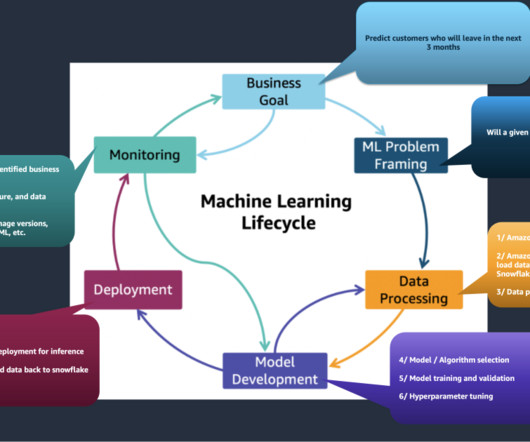

Once the best model is identified, it is usually deployed to production to make accurate predictions on real-world data (data similar to what the model was originally trained on). Ideally, the ML engineering team's responsibilities would end once the model is deployed, but this is not always the case.
