How are AI Projects Different

Towards AI

AUGUST 16, 2023

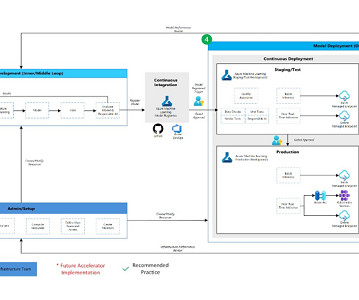

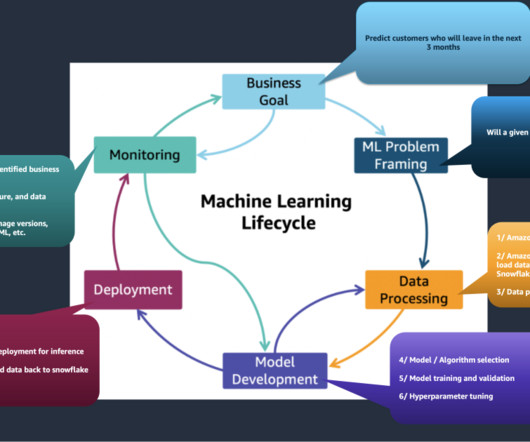

MLOps is the intersection of Machine Learning, DevOps, and Data Engineering.

Monitoring Models in Production

There are several types of problems that Machine Learning applications can encounter over time [4]:

Data drift: sudden changes in feature values or changes in the data distribution.
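One way to make the idea of data drift concrete is to compare a feature's values at training time with the values seen in production. The sketch below is only an illustration, not the article's method: it assumes a single numeric feature and uses a two-sample Kolmogorov-Smirnov statistic with the standard large-sample critical value. The function names `ks_statistic` and `drift_detected`, the `alpha` parameter, and the sample data are all hypothetical.

```python
import bisect
import math


def ks_statistic(reference, current):
    """Return the largest gap between the two empirical CDFs (two-sample KS statistic)."""
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # Both empirical CDFs are step functions, so the largest gap
    # is reached at one of the observed values.
    for x in ref + cur:
        cdf_ref = bisect.bisect_right(ref, x) / len(ref)
        cdf_cur = bisect.bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def drift_detected(reference, current, alpha=0.05):
    """Flag drift when the KS statistic exceeds the asymptotic critical value.

    Assumption: samples are large enough for the approximation
    c(alpha) * sqrt((n + m) / (n * m)), with c(alpha) = sqrt(-ln(alpha / 2) / 2).
    """
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)
    threshold = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > threshold


# Hypothetical data: the same feature at training time and, shifted, in production.
training = [x / 100 for x in range(100)]
production = [x / 100 + 2.0 for x in range(100)]

print(drift_detected(training, training))    # identical samples: no drift flagged
print(drift_detected(training, production))  # disjoint ranges: drift flagged
```

In practice a check like this would run per feature on a schedule, and a library routine such as SciPy's `ks_2samp` could replace the hand-written statistic. The threshold and the choice of test are judgment calls that depend on sample size and on how costly false alarms are.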
