AI News Weekly - Issue #380: 63% of IT and security pros believe AI will improve corporate cybersecurity - Apr 11th 2024

AI Weekly

APRIL 11, 2024

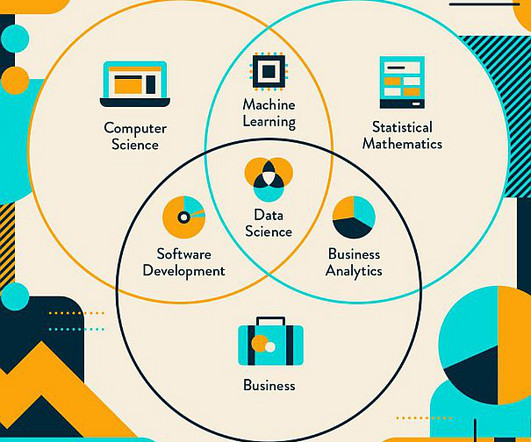

Generative AI is igniting a new era of innovation within the back office. This is particularly true for accounts payable (AP) programs, where AI, coupled with advances in deep learning, computer vision and natural language processing (NLP), is helping businesses increase efficiency, improve accuracy and cut costs.
