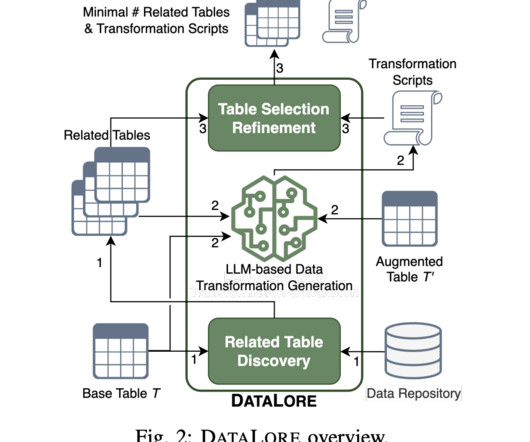

Amazon AI Introduces DataLore: A Machine Learning Framework that Explains Data Changes between an Initial Dataset and Its Augmented Version to Improve Traceability

Marktechpost

MARCH 22, 2024

DATALORE is a data transformation synthesis tool that uses Large Language Models (LLMs) to reduce semantic ambiguity and manual work. These models have been trained on billions of lines of code. As a second step, for each provided base table T, the researchers use data discovery algorithms to find potentially related candidate tables.
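To make the data discovery step concrete, here is a minimal sketch of one common way to shortlist candidate tables for a base table T: scoring column-value overlap with Jaccard similarity. This is an illustrative assumption, not the algorithm the researchers use; the names `Table`, `jaccard`, `find_candidate_tables`, and the `threshold` parameter are all hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical in-memory table: column name -> list of cell values.
Table = Dict[str, List[object]]


def jaccard(a: Set[object], b: Set[object]) -> float:
    """Jaccard similarity of two value sets (0.0 when both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_candidate_tables(
    base: Table, pool: Dict[str, Table], threshold: float = 0.5
) -> List[Tuple[str, float]]:
    """Return (table name, score) pairs for tables in `pool` that have at
    least one column overlapping a column of `base` at or above `threshold`.

    Assumption: value overlap is a reasonable proxy for relatedness.
    """
    candidates = []
    for name, table in pool.items():
        # Best overlap across every (base column, candidate column) pair.
        best = max(
            (
                jaccard(set(base_col), set(cand_col))
                for base_col in base.values()
                for cand_col in table.values()
            ),
            default=0.0,
        )
        if best >= threshold:
            candidates.append((name, best))
    # Highest-scoring candidates first.
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)
```

Given a base table with a `city` column and a pool of tables, a call such as `find_candidate_tables(base, pool)` would return only the tables sharing enough values with it, ranked by overlap. A real discovery system would typically add indexing or sketching to avoid comparing every column pair.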
