Enhancing LLM Reliability: Detecting Confabulations with Semantic Entropy

Marktechpost

JUNE 22, 2024

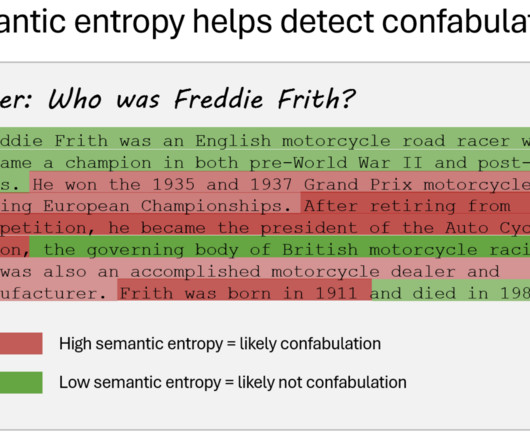

Semantic entropy is a method to detect confabulations in LLMs by measuring their uncertainty over the meaning of generated outputs. This technique leverages predictive entropy and clusters generated sequences by semantic equivalence using bidirectional entailment.

Let's personalize your content