Modular Deep Learning

Sebastian Ruder

FEBRUARY 23, 2023

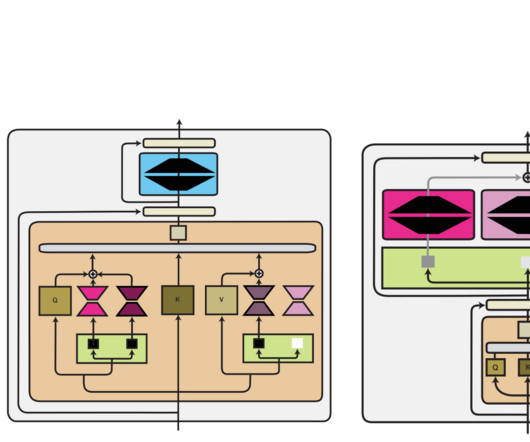

d) Hypernetwork: A small, separate neural network generates modular parameters conditioned on metadata.

Module parameter generation. Instead of learning module parameters directly, they can be generated using an auxiliary model (a hypernetwork) conditioned on additional information and metadata.

Parameter composition. We …
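The idea of generating module parameters can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method of any particular paper. The hypernetwork is a single linear map (the class `Hypernetwork` is hypothetical). The metadata is a small embedding vector, such as a one-hot task or language ID. The generated module is a bottleneck adapter: a down-projection, a ReLU, an up-projection, and a residual connection. Training is omitted. Only the hypernetwork's own weights would be learned; the adapter weights are never stored as free parameters.

```python
import random


def matvec(vec, mat):
    """Multiply a row vector (length n) by a matrix given as n rows of length k."""
    return [sum(v * row[j] for v, row in zip(vec, mat)) for j in range(len(mat[0]))]


class Hypernetwork:
    """Hypothetical linear hypernetwork: metadata embedding -> adapter parameters."""

    def __init__(self, meta_dim, hidden_dim, bottleneck_dim, seed=0):
        rng = random.Random(seed)
        self.d = hidden_dim
        self.r = bottleneck_dim
        # Generated parameters: down-projection (d x r) plus up-projection (r x d).
        self.num_params = 2 * hidden_dim * bottleneck_dim
        # The hypernetwork's own weights (the only learnable part): meta_dim x num_params.
        self.weights = [[rng.gauss(0.0, 0.02) for _ in range(self.num_params)]
                        for _ in range(meta_dim)]

    def generate(self, meta):
        """Return (down, up) adapter matrices generated from a metadata embedding."""
        flat = matvec(meta, self.weights)
        split = self.d * self.r
        # First d*r values form the down-projection, reshaped to d rows of length r.
        down = [flat[i * self.r:(i + 1) * self.r] for i in range(self.d)]
        # Remaining r*d values form the up-projection, reshaped to r rows of length d.
        up_flat = flat[split:]
        up = [up_flat[i * self.d:(i + 1) * self.d] for i in range(self.r)]
        return down, up


def adapter_forward(h, down, up):
    """Bottleneck adapter with a residual connection: h + up(relu(down(h)))."""
    z = [max(0.0, x) for x in matvec(h, down)]
    delta = matvec(z, up)
    return [a + b for a, b in zip(h, delta)]


# Usage: one shared hypernetwork produces a different adapter per metadata vector.
hyper = Hypernetwork(meta_dim=4, hidden_dim=8, bottleneck_dim=2)
task_a = [1.0, 0.0, 0.0, 0.0]  # hypothetical one-hot task/language embeddings
task_b = [0.0, 1.0, 0.0, 0.0]
h = [0.5] * 8                  # a hidden state from some frozen base model
out_a = adapter_forward(h, *hyper.generate(task_a))
out_b = adapter_forward(h, *hyper.generate(task_b))
```

In this sketch, information is shared across tasks through the hypernetwork's weights, while each metadata vector still receives its own adapter. A new metadata vector therefore yields a new module without adding module-specific parameters.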
