Enhancing LLM Reliability: Detecting Confabulations with Semantic Entropy

Marktechpost

JUNE 22, 2024

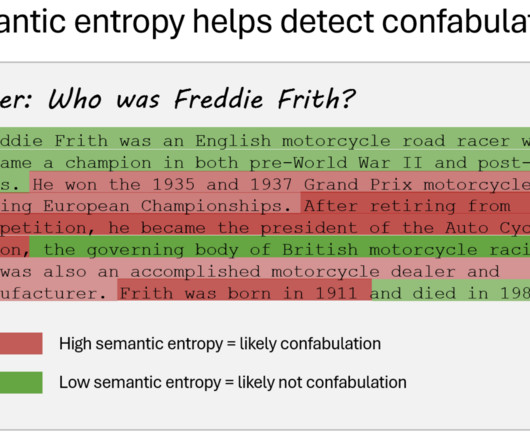

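LLMs like ChatGPT and Gemini demonstrate impressive reasoning and question-answering capabilities but often produce “hallucinations,” meaning they generate false or unsupported information. Confabulations are one kind of hallucination: answers that are both wrong and arbitrary, so the same question can yield a different answer each time it is asked. Semantic entropy is a method to detect confabulations by measuring the model's uncertainty over the meaning of its generated outputs rather than over their exact wording.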
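To make the idea concrete, here is a minimal sketch of a discrete form of semantic entropy: sample several answers to the same question, group the answers that mean the same thing, and compute the entropy of the resulting group frequencies. The function names (`cluster_by_meaning`, `semantic_entropy`, `toy_same_meaning`) are hypothetical and chosen for illustration. The equivalence check here is a simple normalized string comparison, used only as a stand-in; a real system would need a proper meaning-equivalence test (for example, an entailment model), and the answers would be sampled from an LLM rather than hard-coded.

```python
import math
from typing import Callable, List


def cluster_by_meaning(
    answers: List[str], same_meaning: Callable[[str, str], bool]
) -> List[List[str]]:
    """Greedily group answers; each answer joins the first cluster it matches."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            # Compare against the cluster's first member as its representative.
            if same_meaning(cluster[0], answer):
                cluster.append(answer)
                break
        else:
            clusters.append([answer])
    return clusters


def semantic_entropy(
    answers: List[str], same_meaning: Callable[[str, str], bool]
) -> float:
    """Entropy (in nats) of the empirical distribution over meaning clusters."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    clusters = cluster_by_meaning(answers, same_meaning)
    total = len(answers)
    probs = [len(cluster) / total for cluster in clusters]
    return sum(p * math.log(1.0 / p) for p in probs)


def toy_same_meaning(a: str, b: str) -> bool:
    """Stand-in equivalence check (assumption): case- and punctuation-insensitive match."""
    def normalize(s: str) -> str:
        return s.lower().strip(" .")
    return normalize(a) == normalize(b)


# Hard-coded samples standing in for repeated LLM generations.
consistent = ["Paris", "paris.", "Paris"]
scattered = ["Paris", "Lyon", "Rome"]

print(semantic_entropy(consistent, toy_same_meaning))  # one meaning: zero entropy
print(semantic_entropy(scattered, toy_same_meaning))   # three meanings: higher entropy
```

Low entropy means the sampled answers agree in meaning even if their wording differs; high entropy means the model's answers scatter across different meanings, which is the signal this approach treats as a likely confabulation.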
