Complete Beginner’s Guide to Hugging Face LLM Tools

Unite.AI

SEPTEMBER 20, 2023

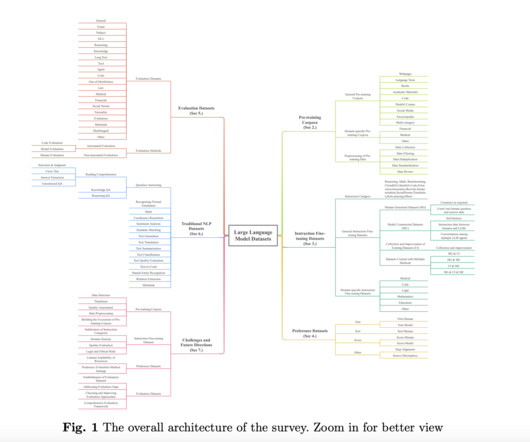

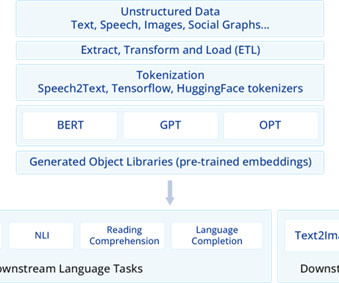

Transformers in NLP

In 2017, researchers at Google published "Attention Is All You Need", an influential paper that introduced the transformer, a deep learning architecture used in natural language processing (NLP). The transformer fueled the development of large language models such as ChatGPT. Hugging Face, founded in 2016, aims to make NLP models accessible to everyone.
