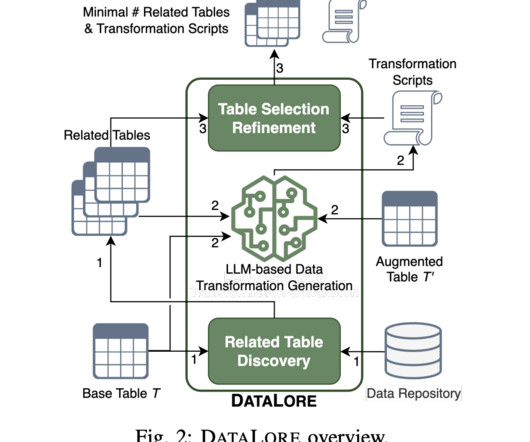

Amazon AI Introduces DataLore: A Machine Learning Framework that Explains Data Changes between an Initial Dataset and Its Augmented Version to Improve Traceability

Marktechpost

MARCH 22, 2024

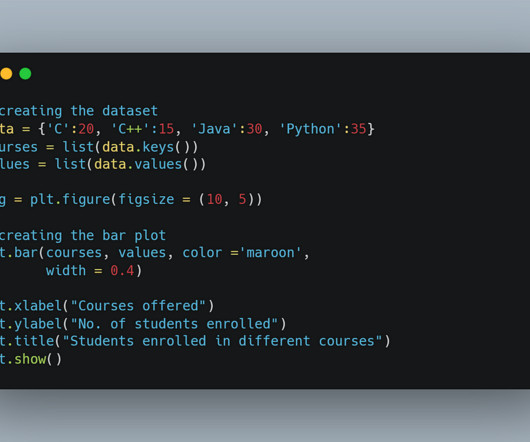

Additionally, by displaying the potential transformations between several tables, DATALORE’s LLM-based data transformation generation can substantially improve the explainability of the returned results, which is particularly useful for users interested in any connected table. Check out the Paper.
