Arize AI on how to apply and use machine learning observability

Snorkel AI

JUNE 30, 2023

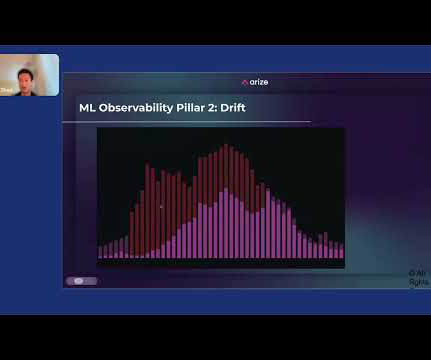

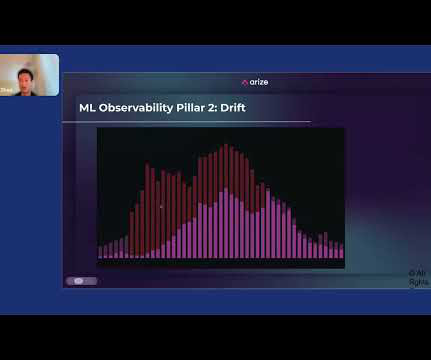

Usually, what ends up happening is that some poor data scientist or ML engineer has to troubleshoot this manually in a Jupyter Notebook. The path on the right side of the production icon is what we’re calling ML observability, and we use four pillars when thinking about it.
