How Axfood enables accelerated machine learning throughout the organization using Amazon SageMaker

AWS Machine Learning Blog

FEBRUARY 27, 2024

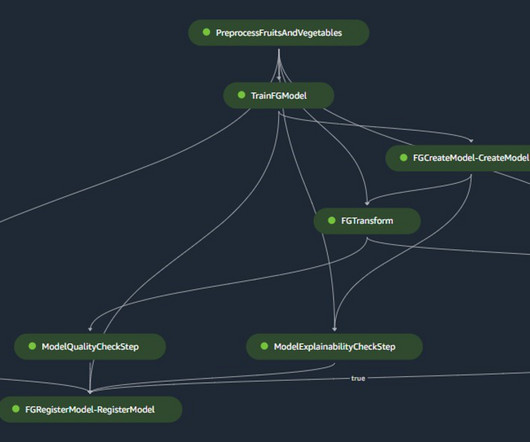

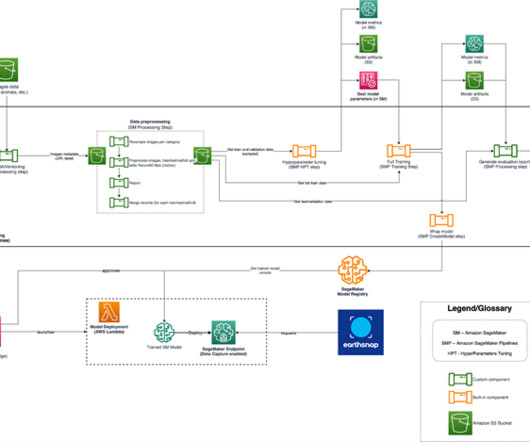

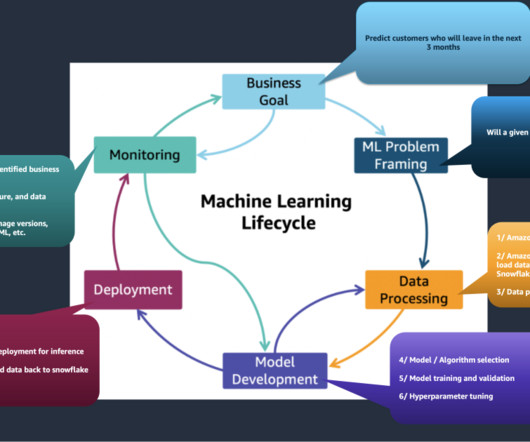

Designated data scientists must approve the model before it is deployed for use in production. For production environments, data ingestion and trigger mechanisms are managed via a primary Airflow orchestration.

Pavel Maslov is a Senior DevOps and ML engineer in the Analytic Platforms team.
