How Axfood enables accelerated machine learning throughout the organization using Amazon SageMaker

AWS Machine Learning Blog

FEBRUARY 27, 2024

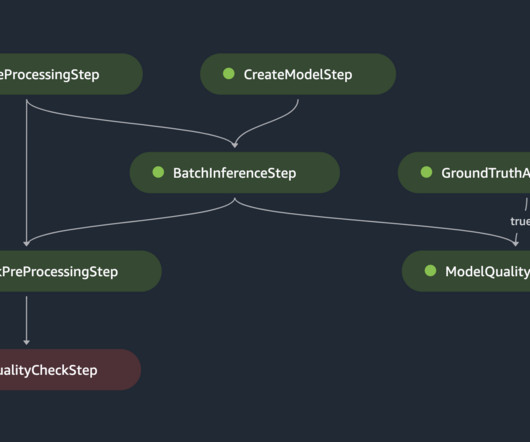

Monitoring – Continuous monitoring runs checks for drift in data quality, model quality, and feature attribution. Workflow A covers preprocessing, data quality and feature attribution drift checks, inference, and postprocessing.
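To make the order of steps in Workflow A concrete, here is a minimal, self-contained sketch of the preprocess → data quality drift check → inference → postprocess sequence. It is not Axfood's pipeline and not how SageMaker Model Monitor computes its checks. The drift metric is a swapped-in technique, the Population Stability Index (PSI), computed over baseline-quantile bins. The 0.2 alert threshold is a common rule of thumb and is assumed here. `preprocess`, `postprocess`, `workflow_a`, and the model callable are hypothetical placeholders. Feature attribution and model quality drift checks are omitted; the latter needs ground-truth labels.

```python
import math
from bisect import bisect_right
from typing import Callable, Dict, List, Optional, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Fraction of values per bin; `edges` are interior cut points, giving len(edges) + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # Floor empty bins at eps so the log ratio in the PSI stays finite.
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float], n_bins: int = 10) -> float:
    """Population Stability Index of `current` against `baseline` (an assumed drift metric)."""
    ordered = sorted(baseline)
    # Interior cut points at the baseline's quantiles.
    edges = [ordered[len(ordered) * k // n_bins] for k in range(1, n_bins)]
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def preprocess(raw: Sequence[Optional[float]]) -> List[float]:
    """Hypothetical preprocessing: drop missing values and cast to float."""
    return [float(x) for x in raw if x is not None]


def postprocess(preds: Sequence[float]) -> List[float]:
    """Hypothetical postprocessing: round predictions for downstream consumers."""
    return [round(p, 2) for p in preds]


def workflow_a(
    raw: Sequence[Optional[float]],
    baseline: Sequence[float],
    model: Callable[[float], float],
    threshold: float = 0.2,  # assumed PSI alert threshold
) -> Dict[str, object]:
    """Hypothetical Workflow A: preprocess, check data quality drift, infer, postprocess."""
    features = preprocess(raw)
    score = psi(baseline, features)
    predictions = postprocess([model(x) for x in features])
    return {"predictions": predictions, "psi": score, "drift": score > threshold}
```

The drift check runs before inference in this sketch so that a flagged batch could be routed for review while predictions are still produced; whether a real pipeline blocks, alerts, or continues on drift is a policy choice the sketch does not assume.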
