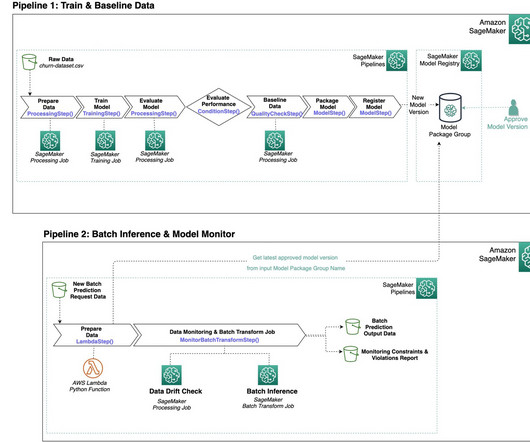

Create SageMaker Pipelines for training, consuming and monitoring your batch use cases

AWS Machine Learning Blog

APRIL 21, 2023

`model.create()` creates a model entity. This entity is included in the custom metadata registered for this model version and is later used by the second pipeline for batch inference and model monitoring. In Studio, you can choose any step to see its key metadata. `…large", accelerator_type="ml.eia1.medium",`
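As a rough illustration of how a `model.create()` call can become a pipeline step, here is a minimal sketch using the SageMaker Python SDK. It is not the post's actual code: the function name `build_create_model_step`, its parameters, and the step name `"CreateModel"` are hypothetical, and the instance type is left to the caller because the excerpt truncates it. The sketch assumes the model is bound to a `PipelineSession`, in which case `create()` returns step arguments rather than creating the model immediately.

```python
def build_create_model_step(image_uri, model_data, role, instance_type,
                            accelerator_type=None, step_name="CreateModel"):
    """Return a ModelStep that creates a SageMaker model entity when the pipeline runs.

    All argument names here are illustrative assumptions, not taken from the post.
    """
    # Imported lazily so this function can be defined without the SDK installed.
    from sagemaker.model import Model
    from sagemaker.workflow.model_step import ModelStep
    from sagemaker.workflow.pipeline_context import PipelineSession

    # A PipelineSession defers execution: calls on the model produce step
    # arguments instead of calling the SageMaker API right away.
    # Constructing it needs AWS credentials and a configured region.
    session = PipelineSession()

    model = Model(
        image_uri=image_uri,      # inference container image
        model_data=model_data,    # S3 URI of the trained model artifact
        role=role,                # IAM execution role ARN
        sagemaker_session=session,
    )

    # Under a PipelineSession, create() returns the arguments for the step.
    step_args = model.create(
        instance_type=instance_type,
        accelerator_type=accelerator_type,  # e.g. "ml.eia1.medium" as in the excerpt
    )
    return ModelStep(name=step_name, step_args=step_args)
```

The returned step would then be passed in the `steps` list of a `sagemaker.workflow.pipeline.Pipeline`. Calling the function requires the `sagemaker` package plus valid AWS credentials and a default region.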
