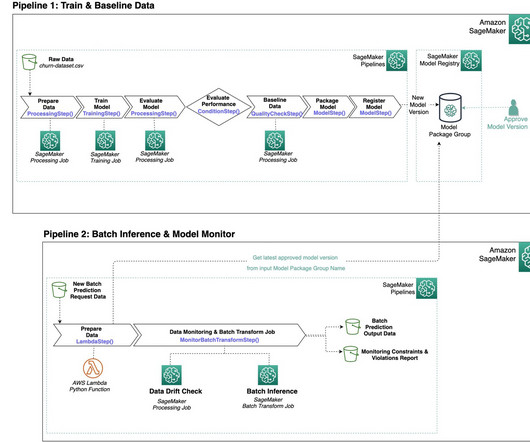

Create SageMaker Pipelines for training, consuming and monitoring your batch use cases

AWS Machine Learning Blog

APRIL 21, 2023

If the model performs acceptably according to the evaluation criteria, the pipeline continues with a step to baseline the data using a built-in SageMaker Pipelines step. For the data drift Model Monitor type, the baselining step uses a SageMaker managed container image to generate statistics and constraints based on your training data.

Let's personalize your content