10 Best Prompt Engineering Courses

Unite.AI

FEBRUARY 23, 2024

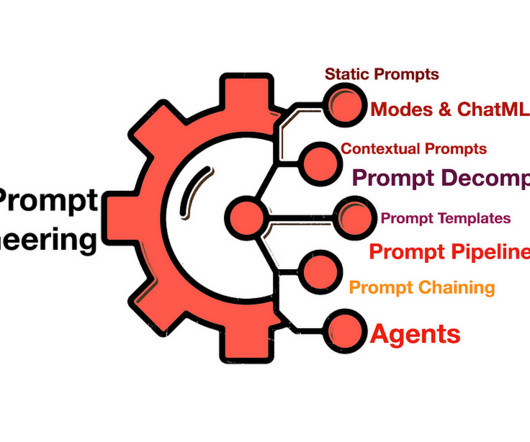

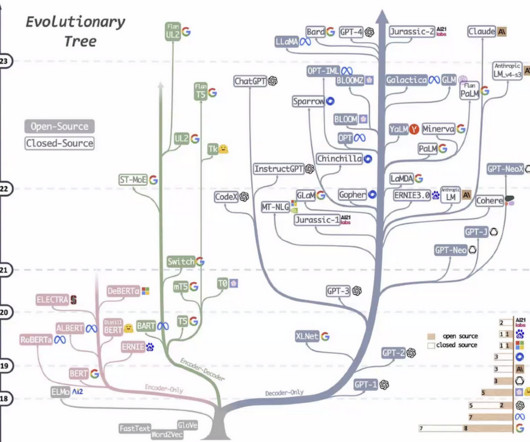

In the ever-evolving landscape of artificial intelligence, prompt engineering has emerged as a pivotal skill for professionals and enthusiasts alike. Prompt engineering is, essentially, the craft of designing inputs that guide AI systems to produce the most accurate, relevant, and creative outputs.
