Natural Language Processing: Beyond BERT and GPT

Towards AI

SEPTEMBER 5, 2023

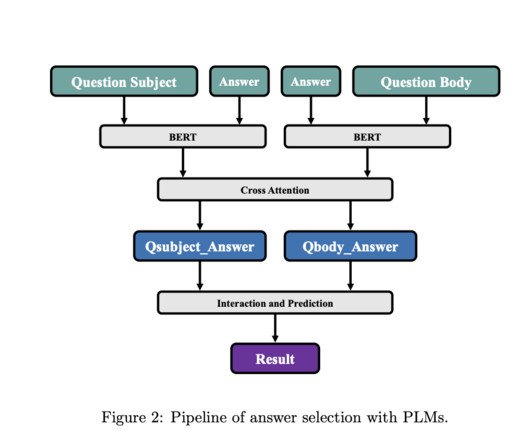

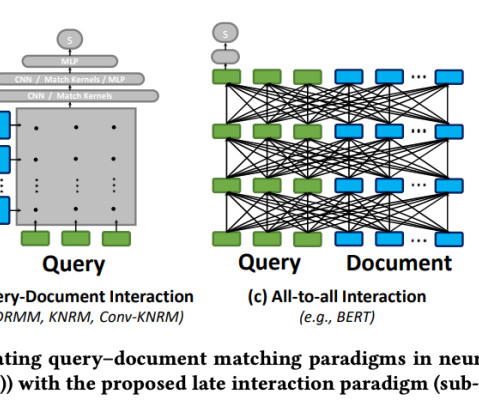

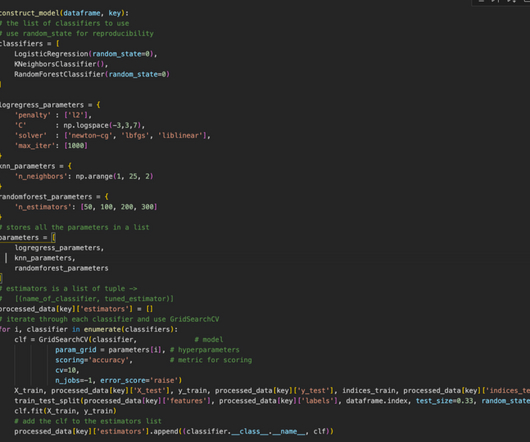

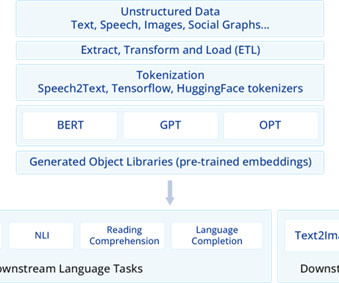

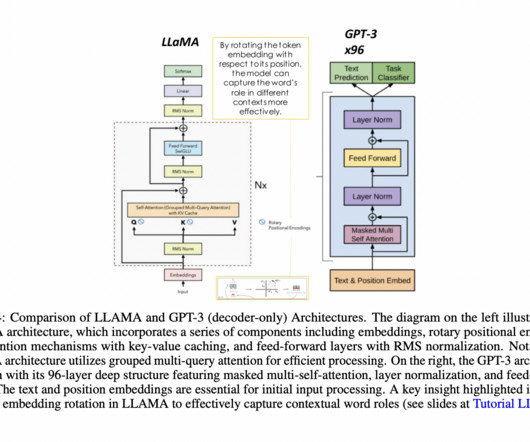

Unlocking the Future of Language: The Next Wave of NLP Innovations

Photo by Joshua Hoehne on Unsplash

The world of technology is ever-evolving, and one area that has seen significant advancements is Natural Language Processing (NLP). A few years back, two groundbreaking models, BERT and GPT, emerged as game-changers.
