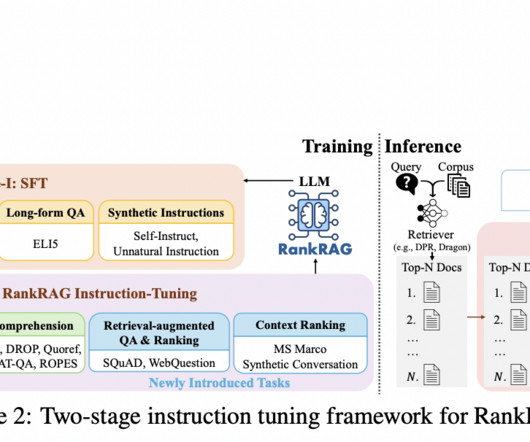

Unveiling the Future of Text Analysis: Trendy Topic Modeling with BERT

Analytics Vidhya

JULY 27, 2023

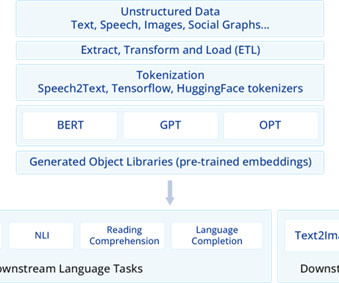

Introduction

Topic modeling is a highly effective method in machine learning and natural language processing. Given a collection of documents, such as a text corpus, the technique finds the abstract topics that appear in it.
