Training Improved Text Embeddings with Large Language Models

Unite.AI

JANUARY 11, 2024

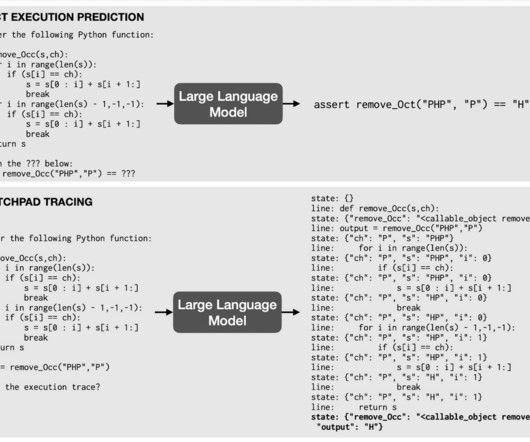

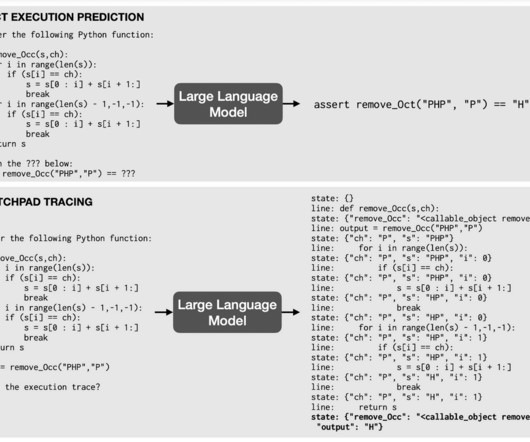

More recent methods based on pre-trained language models such as BERT produce much better context-aware embeddings than earlier approaches. Existing methods predominantly use smaller BERT-style architectures as the backbone model. For model training, the researchers instead fine-tuned the open-source 7B-parameter Mistral model.
