All Languages Are NOT Created (Tokenized) Equal

Topbots

JUNE 15, 2023

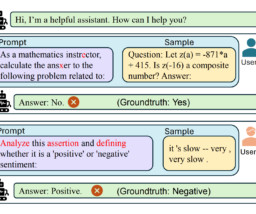

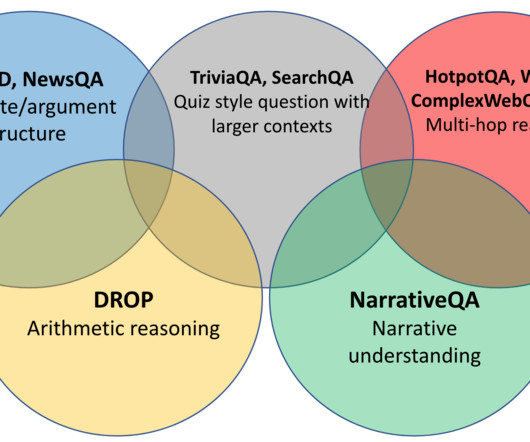

70% of research papers published in a computational linguistics conference evaluated only English [In Findings of the Association for Computational Linguistics: ACL 2022, pages 2340–2354, Dublin, Ireland. Association for Computational Linguistics].

Are All Languages Created Equal in Multilingual BERT?