Decoding Emotions: Sentiment Analysis with BERT

Towards AI

OCTOBER 29, 2024

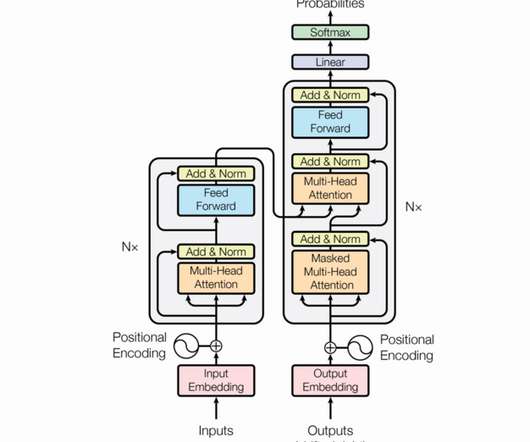

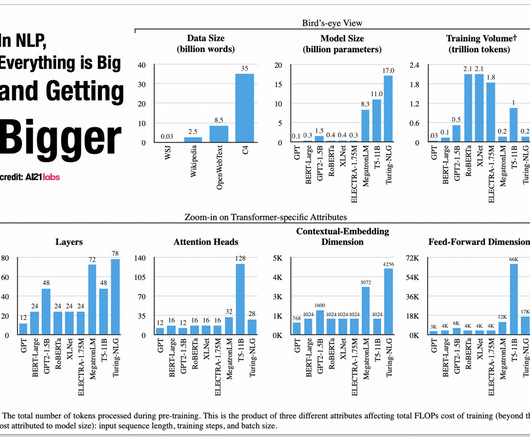

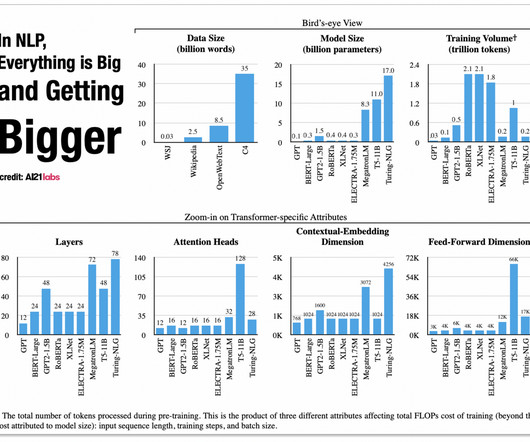

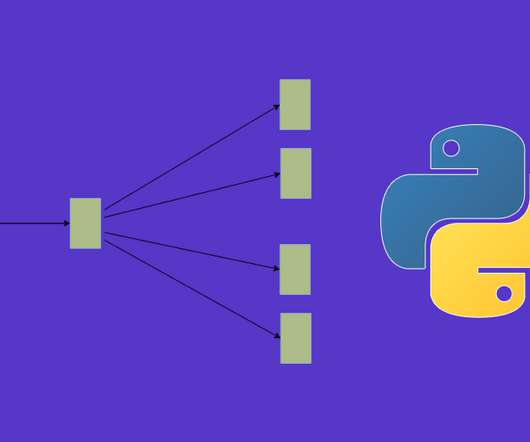

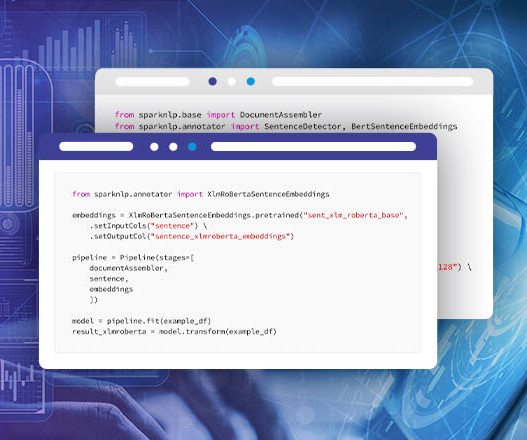

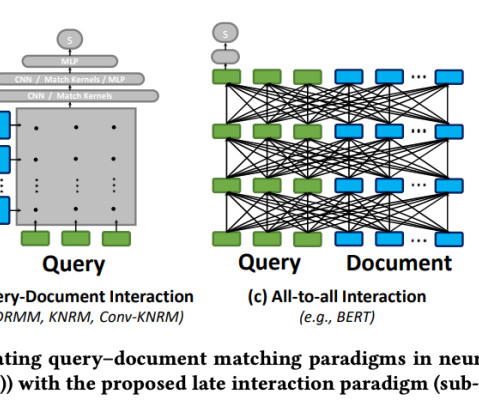

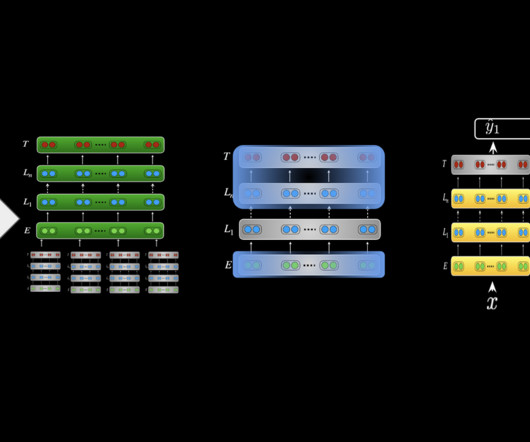

Dive into the world of NLP and learn how to analyze emotions in text with a few lines of code! Think of someone who can read the mood of the people around them. That's a bit like what BERT does, except instead of people, it reads text. BERT, short for Bidirectional Encoder Representations from Transformers, is a pretrained language model developed by Google that uses the words on both sides of each token to work out its meaning in context.
