5G network rollout using DevOps: Myth or reality?

IBM Journey to AI blog

JUNE 12, 2023

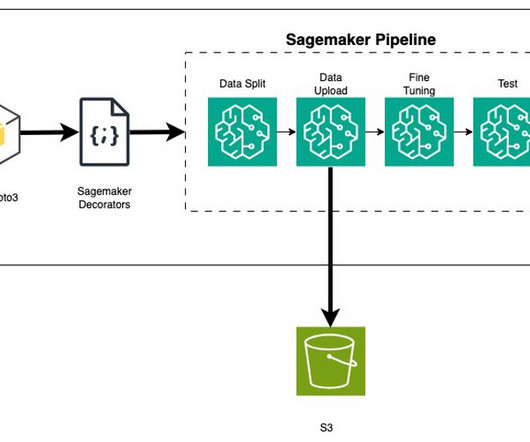

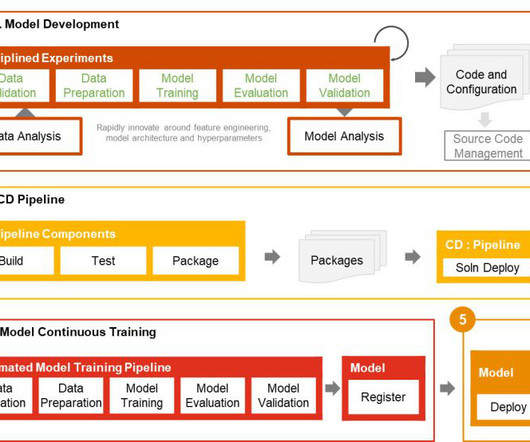

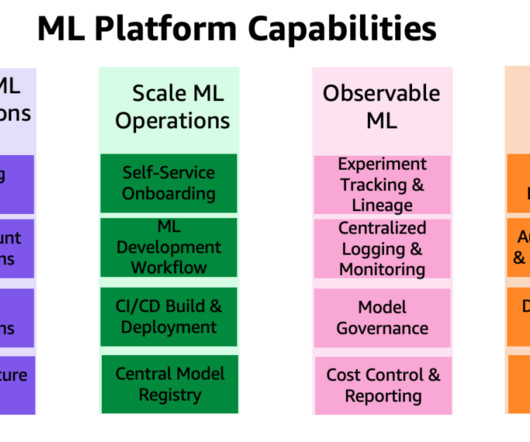

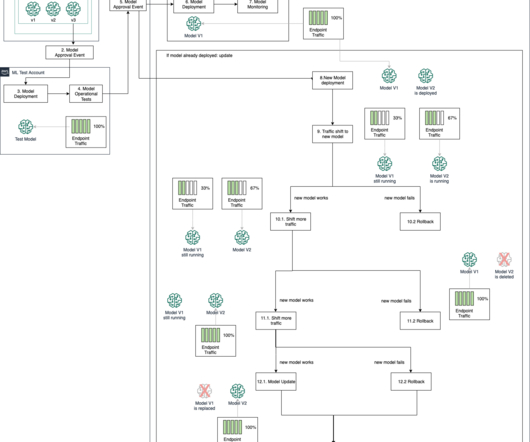

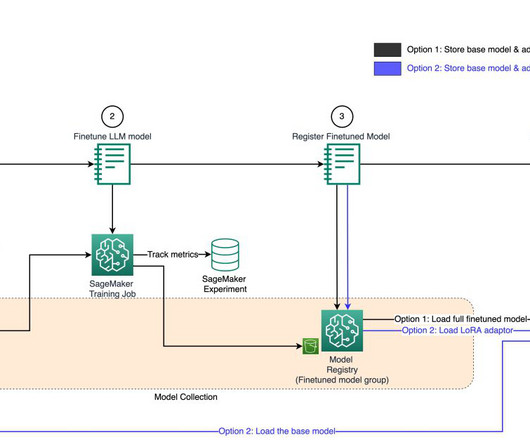

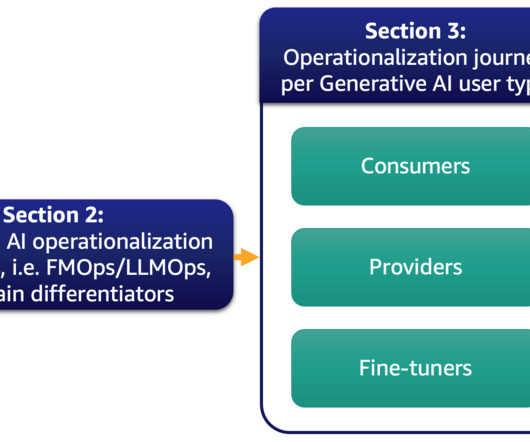

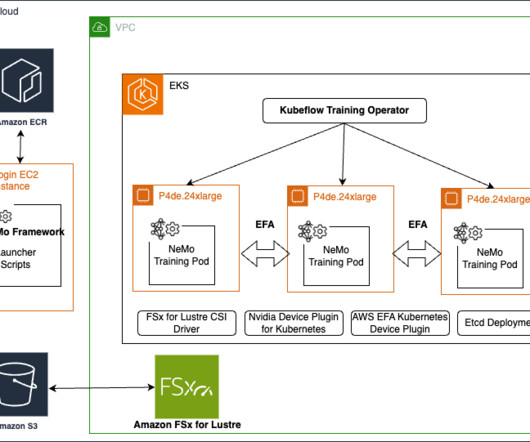

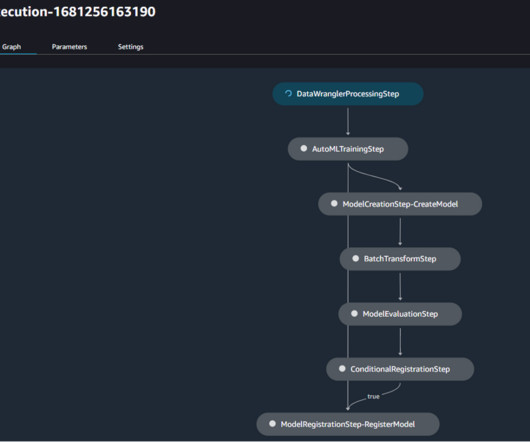

This requires carefully segregating the network deployment process into various “functional layers” of DevOps functionality that, when executed in the correct order, provide a complete automated deployment that aligns closely with the IT DevOps capabilities required by the network function.
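
To make the idea of ordered functional layers more concrete, here is a minimal sketch in Python. The layer names, their dependencies, and the `run_layer` callback are hypothetical and chosen only for illustration; they are not taken from any specific 5G deployment toolchain. The sketch shows one way to derive an execution order from declared dependencies and to stop the rollout as soon as a layer fails.

```python
from graphlib import TopologicalSorter

# Hypothetical functional layers, each mapped to the layers it depends on.
# These names are illustrative assumptions, not a vendor-defined model.
LAYER_DEPENDENCIES = {
    "infrastructure": set(),
    "platform": {"infrastructure"},
    "network_function": {"platform"},
    "configuration": {"network_function"},
    "validation": {"configuration"},
}


def deployment_order(dependencies):
    """Return the layers in an order where every layer follows its prerequisites."""
    return list(TopologicalSorter(dependencies).static_order())


def deploy(dependencies, run_layer):
    """Run each layer in dependency order and stop at the first failure.

    `run_layer` is a caller-supplied function (an assumption of this sketch)
    that takes a layer name and returns True on success.
    """
    completed = []
    for layer in deployment_order(dependencies):
        if not run_layer(layer):
            raise RuntimeError(f"layer {layer!r} failed; completed: {completed}")
        completed.append(layer)
    return completed
```

In practice, `run_layer` might trigger a pipeline stage for each layer. Declaring the dependencies explicitly, rather than hard-coding a sequence, keeps the "correct order" a property that is computed instead of one that must be remembered.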
