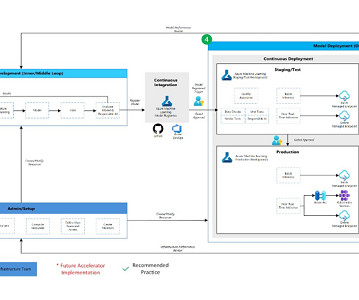

Machine Learning Operations (MLOps) with Azure Machine Learning

ODSC - Open Data Science

JULY 19, 2023

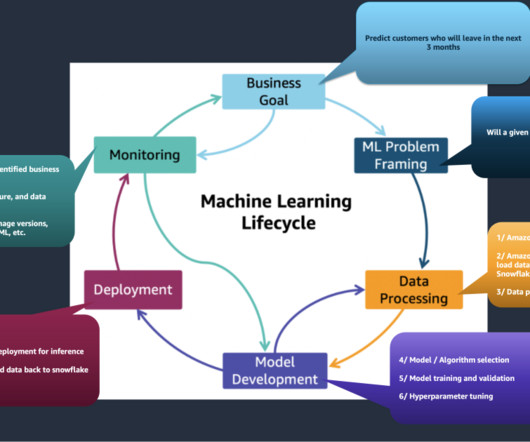

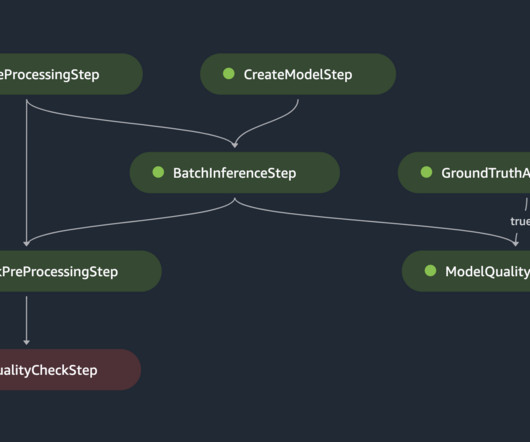

A well-implemented MLOps process not only speeds the transition from testing to production but also provides ownership, lineage, and historical data for the ML artifacts a team uses. For customers, this reduces the time it takes to bootstrap a new data science project and get it to production. The typical score.py
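For readers unfamiliar with the file mentioned above: an Azure Machine Learning scoring script conventionally exposes two functions, `init()` (called once when the serving container starts) and `run()` (called once per request). The following is only a minimal sketch of that shape, not the script from the original article. The `model.pkl` file name, the `{"data": [...]}` request layout, and the `_MeanModel` fallback are all assumptions made for illustration.

```python
import json
import os
import pickle

model = None  # populated once by init()


class _MeanModel:
    """Hypothetical stand-in so the sketch runs without a trained model."""

    def predict(self, rows):
        # Return the mean of each input row.
        return [sum(row) / len(row) for row in rows]


def init():
    """Load the model once at container start-up."""
    global model
    # Azure ML sets AZUREML_MODEL_DIR to the folder holding the registered model.
    model_dir = os.getenv("AZUREML_MODEL_DIR")
    # "model.pkl" is an assumed file name, not one taken from the article.
    model_path = os.path.join(model_dir, "model.pkl") if model_dir else None
    if model_path and os.path.exists(model_path):
        with open(model_path, "rb") as f:
            model = pickle.load(f)
    else:
        # Local fallback for illustration only.
        model = _MeanModel()


def run(raw_data):
    """Score one request; raw_data is a JSON string like {"data": [[...], ...]}."""
    try:
        rows = json.loads(raw_data)["data"]
        predictions = model.predict(rows)
        return {"predictions": list(predictions)}
    except Exception as exc:
        # Return the error as a payload instead of crashing the endpoint.
        return {"error": str(exc)}
```

Run locally, calling `init()` and then `run(json.dumps({"data": [[1, 2, 3]]}))` exercises the same two entry points an online endpoint would invoke, which makes the script easy to unit-test before deployment.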
