How Axfood enables accelerated machine learning throughout the organization using Amazon SageMaker

AWS Machine Learning Blog

FEBRUARY 27, 2024

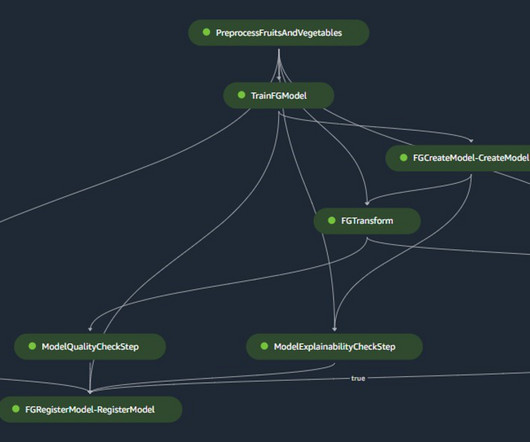

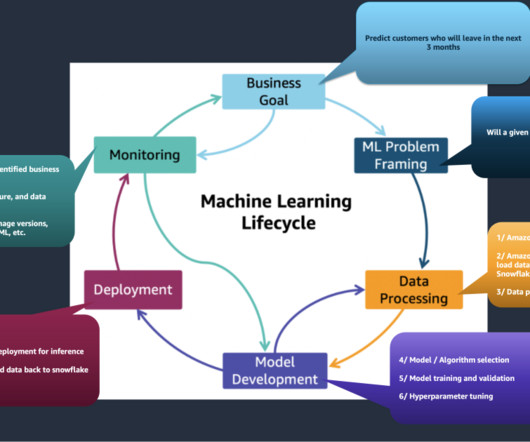

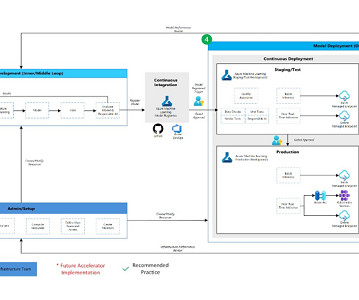

Building new projects from the template is automated through AWS Service Catalog, where a portfolio is created to serve as an abstraction for multiple products. Before a model is deployed for use in production, it must be approved by designated data scientists. Alerts are raised whenever anomalies are detected.
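As a rough illustration of the portfolio and product relationship described here, the following sketch creates a portfolio, registers a project template as a product, and associates the two. It is a minimal sketch and not Axfood's actual setup: the function name `publish_template_product`, the version label `v1`, and all argument values are hypothetical. The client is assumed to be a boto3 Service Catalog client, and the template is assumed to be a CloudFormation template reachable at a URL.

```python
import uuid
from typing import Any, Dict


def publish_template_product(
    sc_client: Any,
    portfolio_name: str,
    product_name: str,
    template_url: str,
    owner: str,
) -> Dict[str, str]:
    """Create a portfolio, add a template-backed product, and link them.

    sc_client is assumed to behave like boto3.client("servicecatalog").
    """
    # A portfolio groups one or more products under a single unit.
    portfolio = sc_client.create_portfolio(
        DisplayName=portfolio_name,
        ProviderName=owner,
        IdempotencyToken=str(uuid.uuid4()),
    )
    portfolio_id = portfolio["PortfolioDetail"]["Id"]

    # A product wraps the project template (assumed to be CloudFormation).
    product = sc_client.create_product(
        Name=product_name,
        Owner=owner,
        ProductType="CLOUD_FORMATION_TEMPLATE",
        ProvisioningArtifactParameters={
            "Name": "v1",  # hypothetical version label
            "Info": {"LoadTemplateFromURL": template_url},
            "Type": "CLOUD_FORMATION_TEMPLATE",
        },
        IdempotencyToken=str(uuid.uuid4()),
    )
    product_id = product["ProductViewDetail"]["ProductViewSummary"]["ProductId"]

    # Associating the product makes it available through the portfolio.
    sc_client.associate_product_with_portfolio(
        ProductId=product_id,
        PortfolioId=portfolio_id,
    )
    return {"portfolio_id": portfolio_id, "product_id": product_id}
```

In practice the function would be called with `boto3.client("servicecatalog")` and appropriate credentials. Passing the client in as an argument keeps the logic easy to exercise with a stub before it touches a real account.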
