Optimize your machine learning deployments with auto scaling on Amazon SageMaker

AWS Machine Learning Blog

FEBRUARY 8, 2023

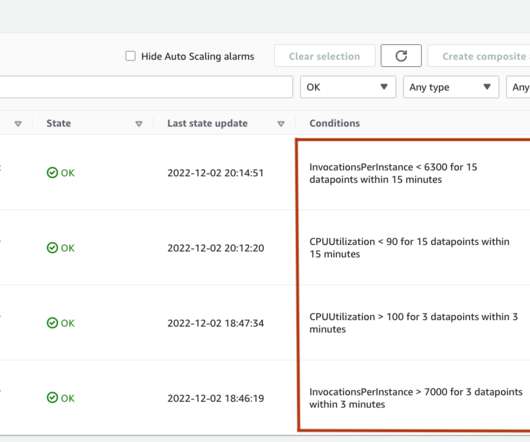

Amazon SageMaker supports automatic scaling (auto scaling) for your hosted models. Auto scaling dynamically adjusts the number of instances provisioned for a model in response to changes in your inference workload. When the workload increases, auto scaling brings more instances online. When the workload decreases, auto scaling removes instances that are no longer needed, so you don't pay for idle capacity.
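
To make this concrete, here is a minimal sketch of how a target tracking policy for an endpoint variant might be set up through Application Auto Scaling with boto3. The helper names (`build_scalable_target`, `build_target_tracking_policy`, `apply_auto_scaling`), the endpoint and variant names, the capacity limits, the target of 70 invocations per instance, and the cooldown values are all illustrative assumptions, not recommendations from this post. The builders only assemble request parameters, so you can inspect them without AWS credentials.

```python
from typing import Any, Dict

SERVICE_NAMESPACE = "sagemaker"
SCALABLE_DIMENSION = "sagemaker:variant:DesiredInstanceCount"


def build_scalable_target(endpoint_name: str, variant_name: str,
                          min_instances: int, max_instances: int) -> Dict[str, Any]:
    """Assemble parameters for register_scalable_target (hypothetical helper)."""
    if min_instances < 0 or max_instances < min_instances:
        raise ValueError("expected 0 <= min_instances <= max_instances")
    return {
        "ServiceNamespace": SERVICE_NAMESPACE,
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": SCALABLE_DIMENSION,
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }


def build_target_tracking_policy(target: Dict[str, Any], policy_name: str,
                                 invocations_per_instance: float) -> Dict[str, Any]:
    """Assemble parameters for put_scaling_policy (hypothetical helper)."""
    return {
        "PolicyName": policy_name,
        "ServiceNamespace": target["ServiceNamespace"],
        "ResourceId": target["ResourceId"],
        "ScalableDimension": target["ScalableDimension"],
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Scale so that each instance handles roughly this many
            # invocations per minute (assumed value, tune for your model).
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance",
            },
            "ScaleOutCooldown": 60,   # seconds, assumed
            "ScaleInCooldown": 300,   # seconds, assumed
        },
    }


def apply_auto_scaling(target: Dict[str, Any], policy: Dict[str, Any]) -> None:
    """Send both requests to AWS. Requires boto3 and valid credentials."""
    import boto3  # imported lazily so the builders work without AWS access

    client = boto3.client("application-autoscaling")
    client.register_scalable_target(**target)
    client.put_scaling_policy(**policy)


if __name__ == "__main__":
    # "my-endpoint" and "AllTraffic" are placeholder names.
    target = build_scalable_target("my-endpoint", "AllTraffic", 1, 4)
    policy = build_target_tracking_policy(target, "invocations-target", 70.0)
    print(target)
    print(policy)
    # apply_auto_scaling(target, policy)  # uncomment to apply against a real endpoint
```

With a policy like this in place, instances are added when the per-instance invocation rate stays above the target and removed when it stays below, within the minimum and maximum you registered.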
