Create a document lake using large-scale text extraction from documents with Amazon Textract

AWS Machine Learning Blog

JANUARY 8, 2024

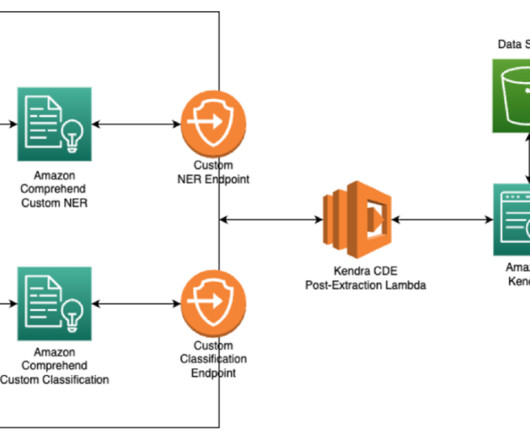

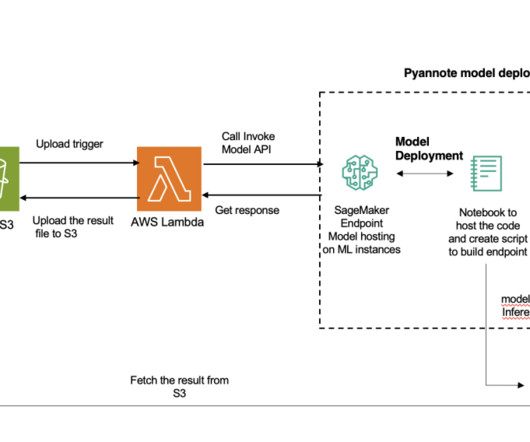

AWS customers in healthcare, financial services, the public sector, and other industries store billions of documents as images or PDFs in Amazon Simple Storage Service (Amazon S3). In this post, we focus on processing a large collection of documents into raw text files and storing them in Amazon S3.

Let's personalize your content