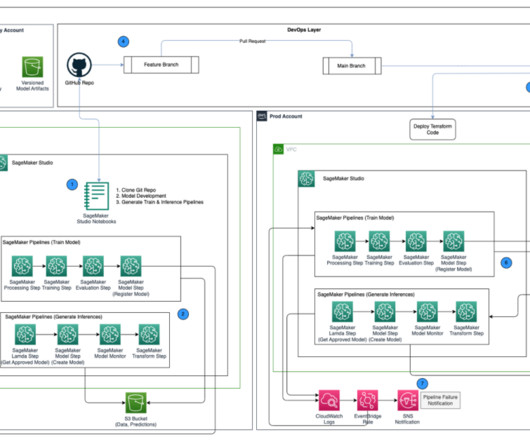

Deploy Amazon SageMaker pipelines using AWS Controllers for Kubernetes

AWS Machine Learning Blog

SEPTEMBER 4, 2024

Amazon SageMaker provides capabilities that remove the undifferentiated heavy lifting of building and deploying ML models. It simplifies managing dependencies, container images, auto scaling, and monitoring. ML engineers often work with DevOps engineers to operate the pipelines that build and deploy these models.

`amazonaws.com/sagemaker-xgboost:1.7-1",`
