Top LangChain Books to Read in 2024

Marktechpost

APRIL 16, 2024

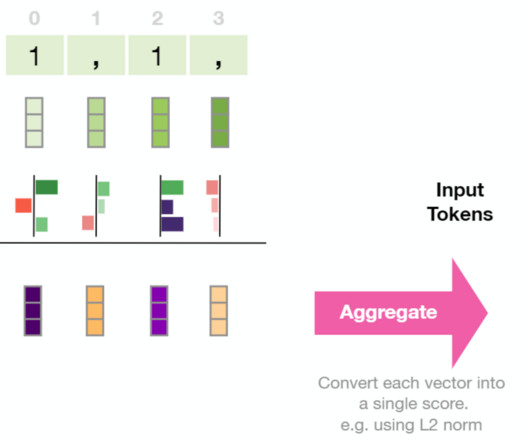

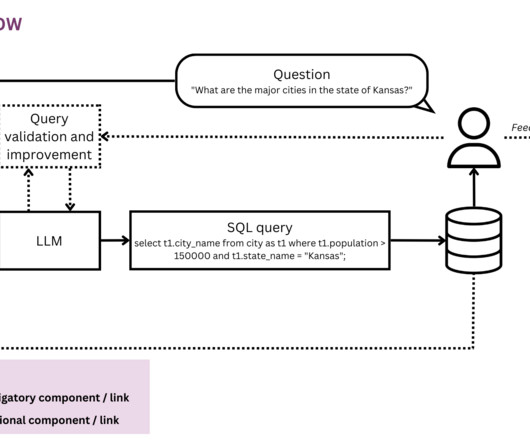

The book covers the inner workings of LLMs and provides sample code for working with models such as GPT-4, BERT, T5, and LLaMA. It explains the fundamentals of LLMs and generative AI, and covers prompt engineering as a way to improve model performance. Other topics include Auto-SQL, NER, RAG, and autonomous AI agents.
