How Sportradar used the Deep Java Library to build production-scale ML platforms for increased performance and efficiency

AWS Machine Learning Blog

APRIL 19, 2023

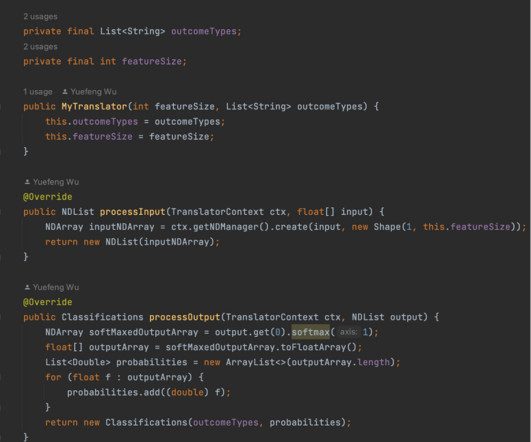

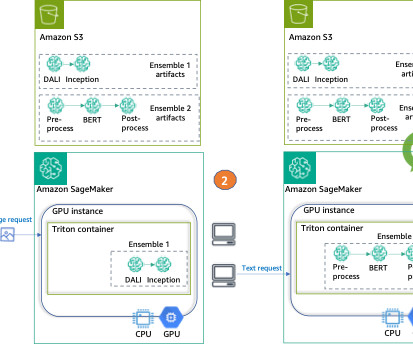

Then we needed to Dockerize the application, write a deployment YAML file, deploy the gRPC server to our Kubernetes cluster, and make sure it was reliable and could autoscale. In our case, we chose `float[]` as the input type and DJL's built-in `Classifications` as the output type. There is also much more to come from DJL.
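To make the output side more concrete, here is a minimal plain-Java sketch of the post-processing that typically sits behind a `float[]`-in, `Classifications`-out model. It turns raw scores into probabilities with a softmax and pairs them with class labels. The sketch avoids the DJL dependency so it runs on the JDK alone. The class `ScoreClassifier`, its `Prediction` record, the label names, and the assumption that the model emits one unnormalized score per class are all hypothetical. In real DJL code, this logic would usually live in an implementation of `ai.djl.translate.Translator<float[], Classifications>`, with the result built as an `ai.djl.modality.Classifications` object.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/**
 * Hypothetical, dependency-free illustration of turning raw model scores
 * (one float per class) into labelled probabilities, similar in spirit to
 * what DJL's Classifications output represents. Not DJL's API.
 */
final class ScoreClassifier {

    /** One label with its probability (hypothetical stand-in type). */
    record Prediction(String label, double probability) {}

    private final List<String> labels;

    ScoreClassifier(List<String> labels) {
        this.labels = List.copyOf(labels);
    }

    /** Applies a numerically stable softmax and returns the k most likely labels. */
    List<Prediction> classify(float[] scores, int k) {
        if (scores.length != labels.size()) {
            throw new IllegalArgumentException(
                    "expected " + labels.size() + " scores, got " + scores.length);
        }
        // Subtract the maximum score before exponentiating to avoid overflow.
        float max = Float.NEGATIVE_INFINITY;
        for (float s : scores) {
            max = Math.max(max, s);
        }
        double[] exp = new double[scores.length];
        double sum = 0.0;
        for (int i = 0; i < scores.length; i++) {
            exp[i] = Math.exp(scores[i] - max);
            sum += exp[i];
        }
        // Normalize so the probabilities sum to 1 and attach each label.
        List<Prediction> predictions = new ArrayList<>();
        for (int i = 0; i < scores.length; i++) {
            predictions.add(new Prediction(labels.get(i), exp[i] / sum));
        }
        // Highest probability first, then keep at most k entries.
        predictions.sort(Comparator.comparingDouble(Prediction::probability).reversed());
        int limit = Math.min(Math.max(k, 0), predictions.size());
        return new ArrayList<>(predictions.subList(0, limit));
    }
}
```

For example, `new ScoreClassifier(List.of("class_a", "class_b", "class_c")).classify(new float[] {1.0f, 3.0f, 2.0f}, 2)` returns `class_b` followed by `class_c`. Subtracting the maximum score first does not change the softmax result but keeps it from overflowing when the raw scores are large.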
