How Sportradar used the Deep Java Library to build production-scale ML platforms for increased performance and efficiency

AWS Machine Learning Blog

APRIL 19, 2023

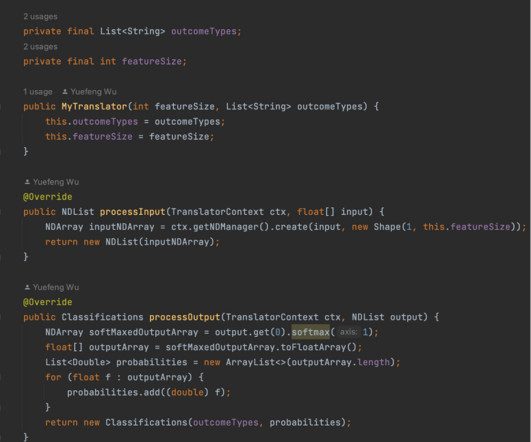

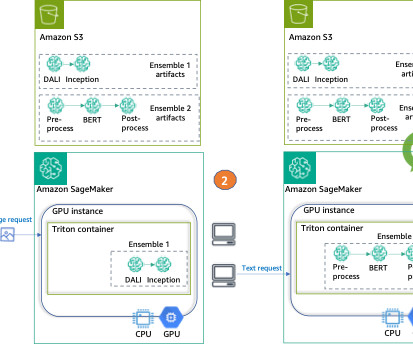

The Deep Java Library (DJL) is a deep learning framework built from the ground up to support users of Java and JVM languages like Scala, Kotlin, and Clojure. With the DJL, integrating deep learning into a JVM application is simple. In our case, we chose to use a `float[]` as the input type and the built-in DJL `Classifications` class as the output type.
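To make that input/output contract concrete, here is a minimal, dependency-free sketch of the shape of the data flow: a `float[]` of features goes in, and a list of labeled probabilities comes out, sorted by likelihood. It deliberately does not call the DJL. The class name, the fixed weights, and the labels are all hypothetical stand-ins for a trained model and its class names. In the DJL itself, the conversion between application types and tensors is typically handled by a `Translator`, here one typed with `float[]` as input and `Classifications` as output.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a model that maps float[] features to class probabilities.
// None of these names come from the DJL; they only illustrate the input/output types.
class FloatArrayClassifierSketch {

    // Hypothetical labels and weights (3 classes x 2 features), not from the article.
    static final String[] LABELS = {"class_a", "class_b", "class_c"};
    static final float[][] WEIGHTS = {{1.0f, 0.0f}, {0.0f, 1.0f}, {-1.0f, -1.0f}};

    /** One labeled probability, analogous in spirit to one entry of a classification result. */
    static final class Scored {
        final String label;
        final double probability;

        Scored(String label, double probability) {
            this.label = label;
            this.probability = probability;
        }
    }

    /** Numerically stable softmax: subtract the max score before exponentiating. */
    static double[] softmax(float[] scores) {
        double max = Double.NEGATIVE_INFINITY;
        for (float s : scores) {
            max = Math.max(max, s);
        }
        double[] out = new double[scores.length];
        double sum = 0.0;
        for (int i = 0; i < scores.length; i++) {
            out[i] = Math.exp(scores[i] - max);
            sum += out[i];
        }
        for (int i = 0; i < out.length; i++) {
            out[i] /= sum;
        }
        return out;
    }

    /** Takes raw features, applies the stand-in linear model, returns results sorted by probability. */
    static List<Scored> classify(float[] features) {
        if (features.length != WEIGHTS[0].length) {
            throw new IllegalArgumentException("expected " + WEIGHTS[0].length + " features");
        }
        float[] scores = new float[WEIGHTS.length];
        for (int c = 0; c < WEIGHTS.length; c++) {
            for (int f = 0; f < features.length; f++) {
                scores[c] += WEIGHTS[c][f] * features[f];
            }
        }
        double[] probabilities = softmax(scores);
        List<Scored> result = new ArrayList<>();
        for (int c = 0; c < LABELS.length; c++) {
            result.add(new Scored(LABELS[c], probabilities[c]));
        }
        // Most likely class first.
        result.sort((a, b) -> Double.compare(b.probability, a.probability));
        return result;
    }

    public static void main(String[] args) {
        for (Scored s : classify(new float[] {2.0f, 0.0f})) {
            System.out.println(s.label + " " + s.probability);
        }
    }
}
```

Keeping the caller-facing types this plain means the application code never touches tensors directly; the conversion in and out of the model's native representation stays in one place.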
