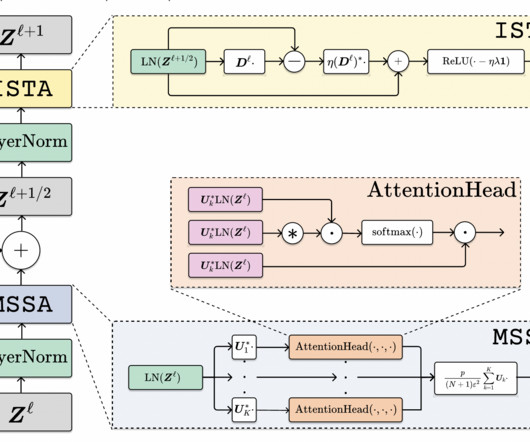

UC Berkeley Researchers Propose CRATE: A Novel White-Box Transformer for Efficient Data Compression and Sparsification in Deep Learning

Marktechpost

NOVEMBER 25, 2023

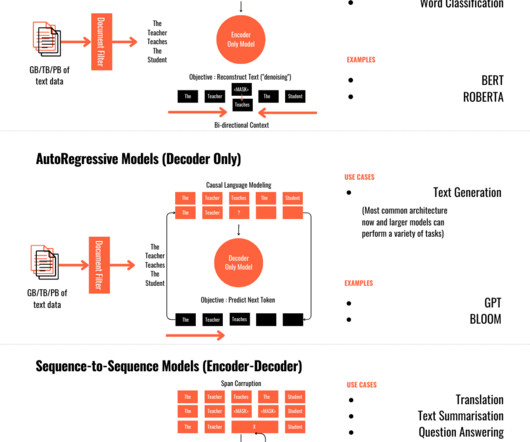

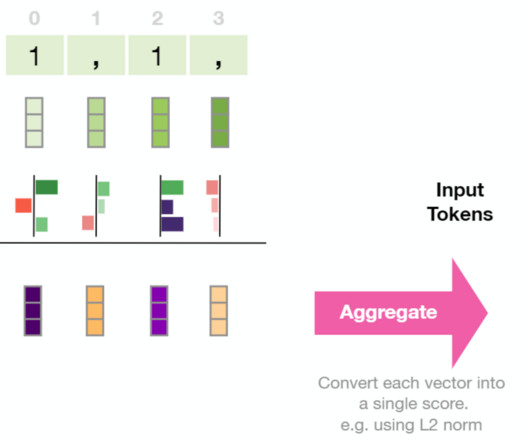

Deep learning has become markedly more successful in practice at processing and modeling large amounts of high-dimensional, multi-modal data in recent years. The compact representations that deep networks learn from such data make many downstream tasks easier, including vision tasks such as classification, recognition, and segmentation, as well as generation.
