Re-imagining Glamour Photography with Generative AI

Mlearning.ai

JULY 3, 2023

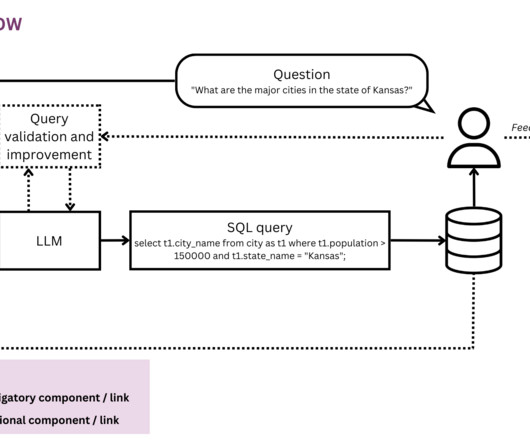

Life, however, decided to take me down a different path (partly thanks to Fujifilm discontinuing various films), although I have never quite forgotten about glamour photography.

Denoising process summary: text from a prompt is tokenized and encoded numerically. Image created by the author.
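As a rough illustration of the first step above, the sketch below turns a prompt into padded integer token IDs and then looks each ID up in an embedding table. This is a toy, stdlib-only example under stated assumptions: the whitespace/regex tokenizer, the tiny vocabulary, the special tokens and the randomly initialised embedding table are all hypothetical. Real text-to-image pipelines use a learned subword tokenizer and a trained transformer text encoder (for example CLIP in Stable Diffusion), so only the overall shape of the process carries over.

```python
import random
import re

# Hypothetical special tokens; real tokenizers define their own.
SPECIAL = {"<pad>": 0, "<bos>": 1, "<eos>": 2, "<unk>": 3}


def build_vocab(corpus):
    """Assign an integer ID to every lowercase word seen in the toy corpus."""
    vocab = dict(SPECIAL)
    for text in corpus:
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def tokenize(prompt, vocab, max_len=8):
    """Split a prompt into words and map them to IDs.

    Adds begin/end markers, maps unknown words to <unk>, truncates to
    max_len and pads with <pad> so every prompt has the same length.
    """
    words = re.findall(r"[a-z0-9]+", prompt.lower())
    ids = [vocab["<bos>"]] + [vocab.get(w, vocab["<unk>"]) for w in words]
    ids = ids[: max_len - 1] + [vocab["<eos>"]]
    ids += [vocab["<pad>"]] * (max_len - len(ids))
    return ids


def embed(ids, vocab_size, dim=4, seed=0):
    """Replace each token ID with a vector from a (randomly initialised) lookup table.

    In a real model this table is learned, and a transformer then turns
    these per-token vectors into context-aware embeddings.
    """
    rng = random.Random(seed)
    table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]
    return [table[i] for i in ids]


if __name__ == "__main__":
    vocab = build_vocab(["glamour portrait", "studio lighting film grain"])
    ids = tokenize("Glamour portrait, studio lighting", vocab)
    print(ids)  # [1, 4, 5, 6, 7, 2, 0, 0]
    vectors = embed(ids, len(vocab))
    print(len(vectors), len(vectors[0]))  # 8 4
```

Fixed-length padding mirrors what diffusion pipelines typically do, since the denoising network expects a conditioning tensor of constant shape regardless of how long the prompt is.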
