Fantasy Football trades: How IBM Granite foundation models drive personalized explainability for millions

IBM Journey to AI blog

OCTOBER 15, 2024

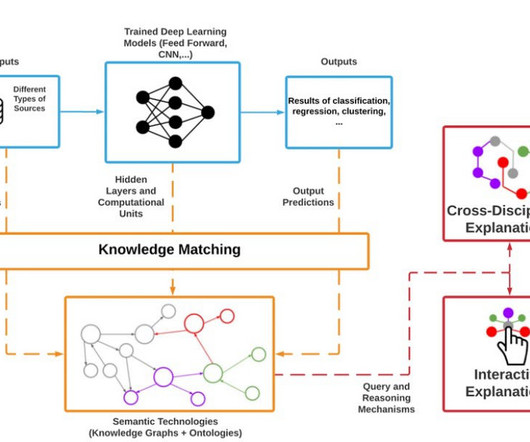

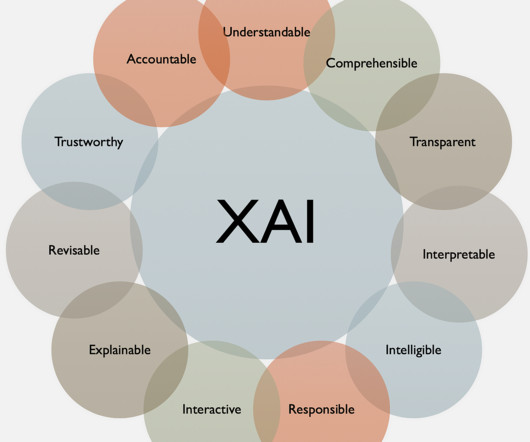

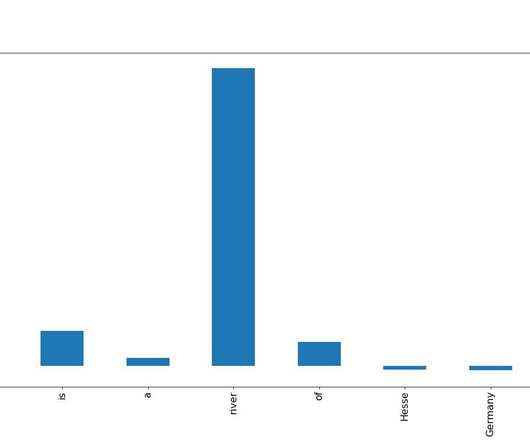

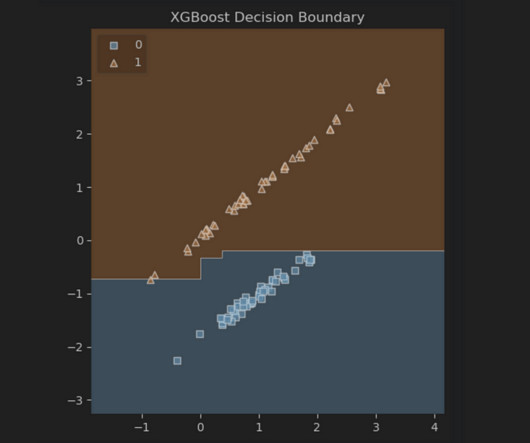

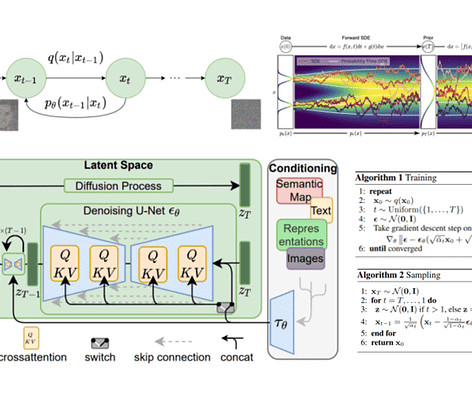

When a user taps on a player to acquire or trade, a list of “Top Contributing Factors” now appears alongside the numerical grade, providing team managers with personalized explainability in natural language generated by the IBM® Granite™ large language model (LLM). Why did it take so long? In a word: scale.
