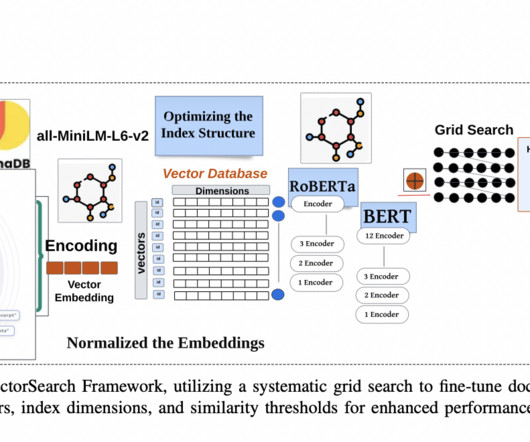

VectorSearch: A Comprehensive Solution to Document Retrieval Challenges with Hybrid Indexing, Multi-Vector Search, and Optimized Query Performance

Marktechpost

SEPTEMBER 30, 2024

Traditional algorithms and libraries often depend heavily on main-memory storage and cannot distribute data across multiple machines, which limits their scalability. The VectorSearch framework incorporates caching mechanisms and optimized search algorithms to improve response times and overall performance. The system achieved an average query time of 0.47
