UltraFastBERT: Exponentially Faster Language Modeling

Unite.AI

DECEMBER 8, 2023

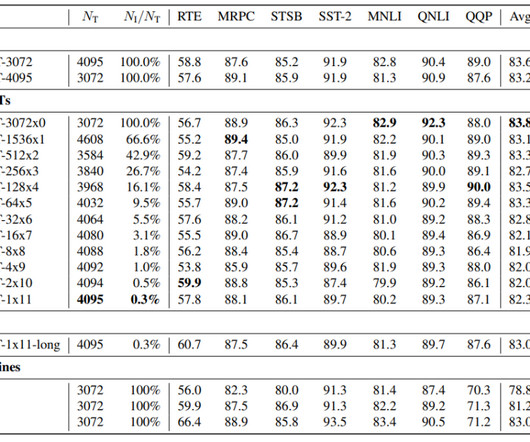

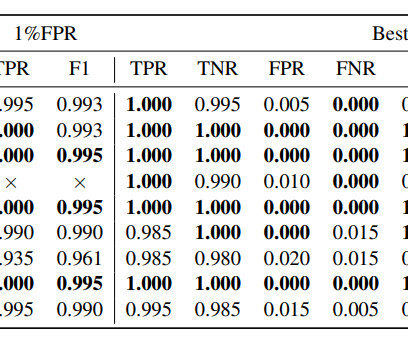

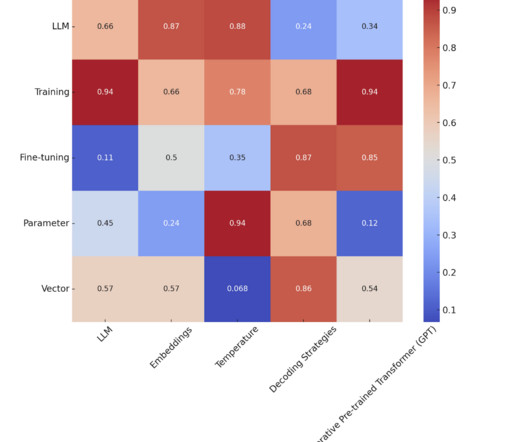

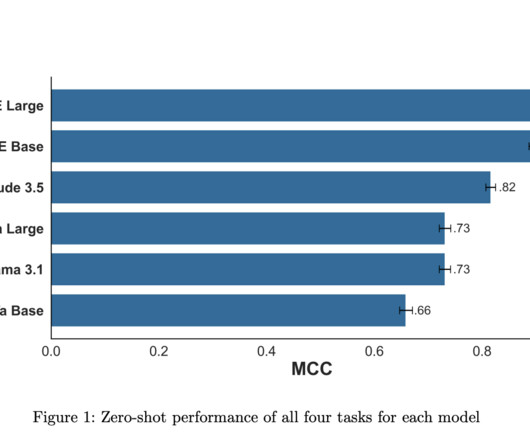

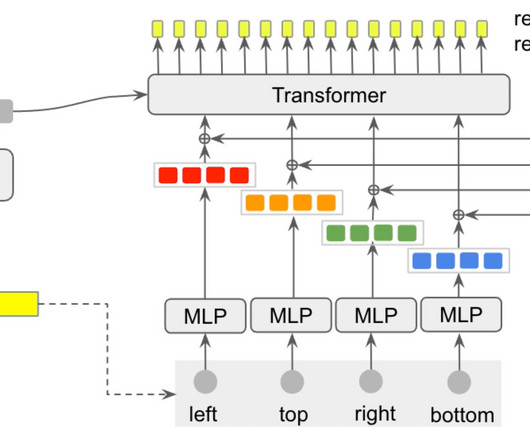

This article introduces UltraFastBERT, a BERT-based framework that uses just 0.3% of its available neurons during inference while delivering results comparable to BERT models of similar size and training process, especially on downstream tasks.
