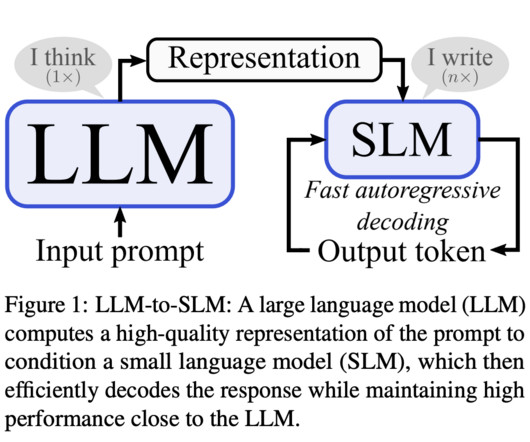

Enhancing Autoregressive Decoding Efficiency: A Machine Learning Approach by Qualcomm AI Research Using Hybrid Large and Small Language Models

Marktechpost

MARCH 3, 2024

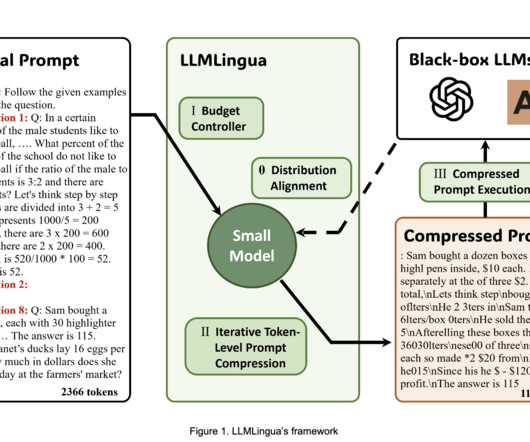

Central to Natural Language Processing (NLP) advancements are large language models (LLMs), which have set new benchmarks for what machines can achieve in understanding and generating human language. One of the primary challenges in NLP is the computational demand for autoregressive decoding in LLMs.

Let's personalize your content