MIT’s AI Agents Pioneer Interpretability in AI Research

Analytics Vidhya

JANUARY 6, 2024

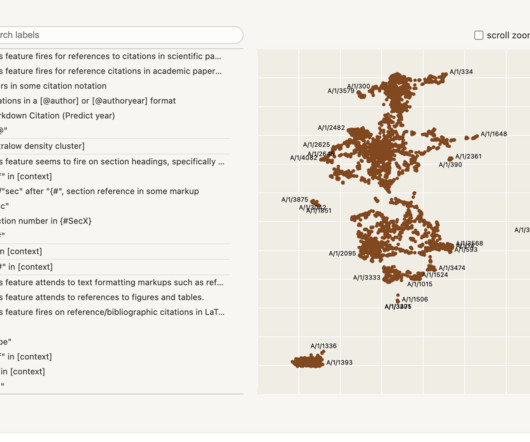

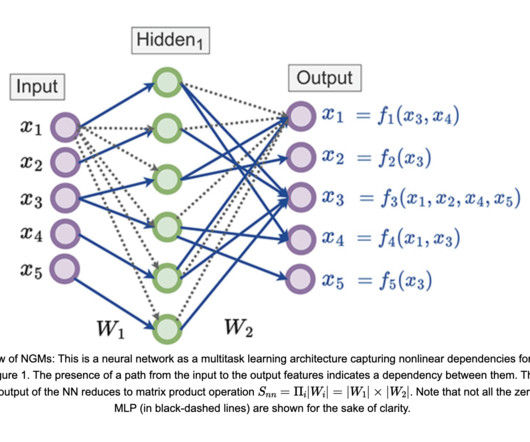

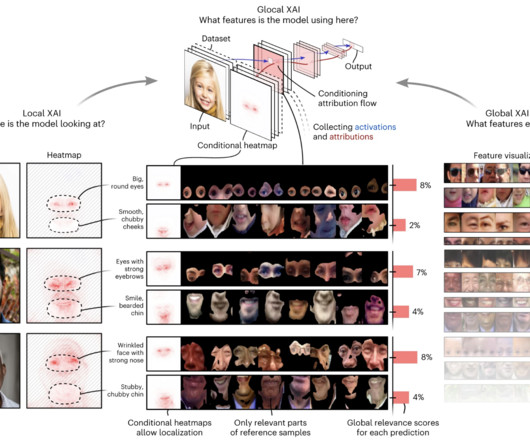

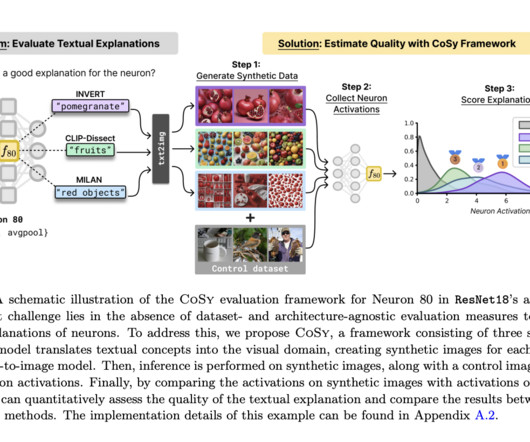

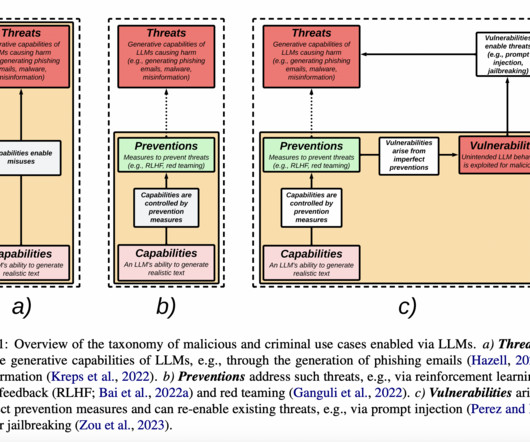

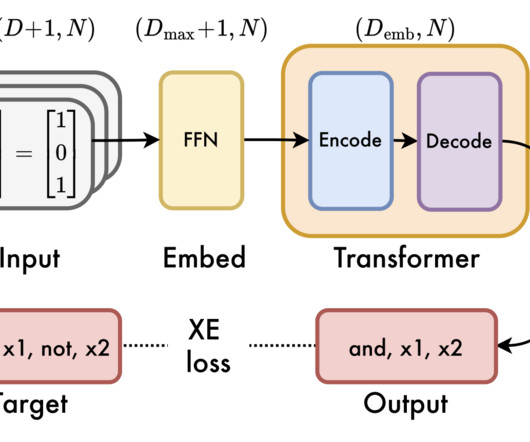

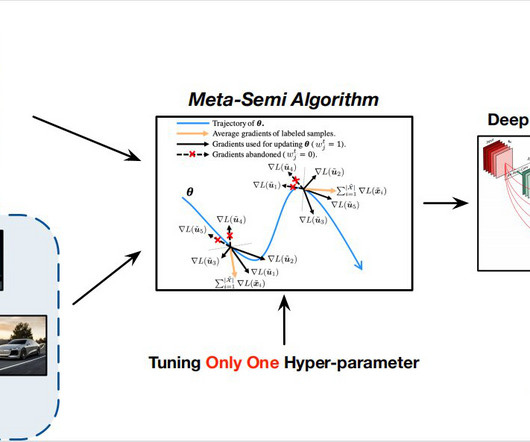

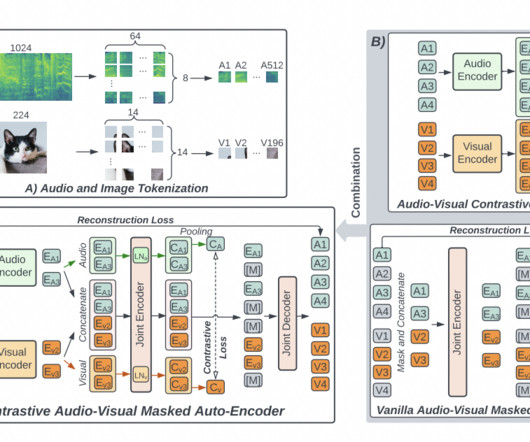

In a groundbreaking development, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have introduced a novel method leveraging artificial intelligence (AI) agents to automate the explanation of intricate neural networks.
