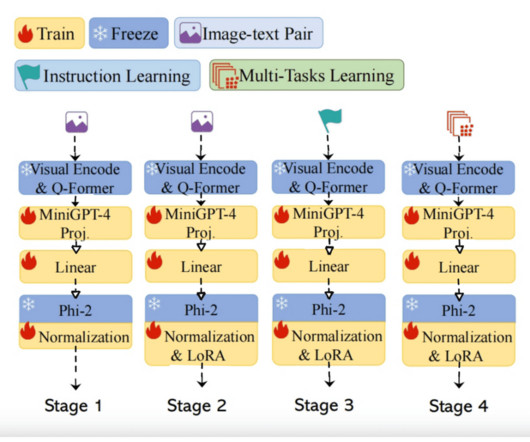

This AI Research Introduces TinyGPT-V: A Parameter-Efficient MLLMs (Multimodal Large Language Models) Tailored for a Range of Real-World Vision-Language Applications

Marktechpost

JANUARY 2, 2024

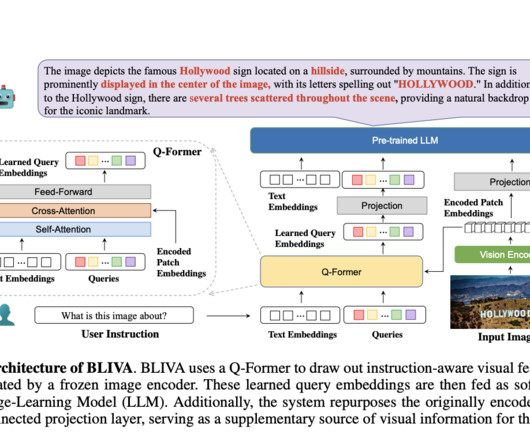

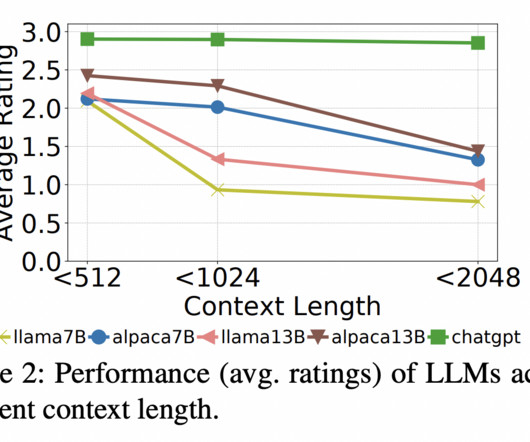

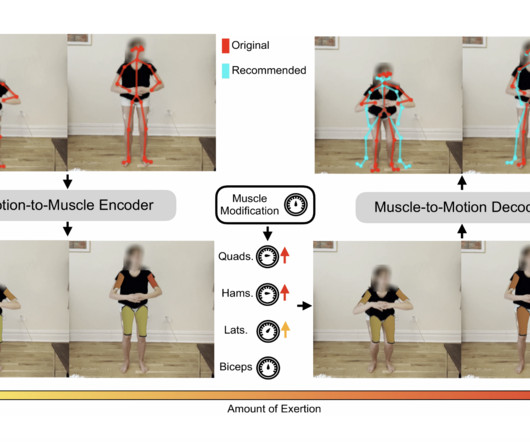

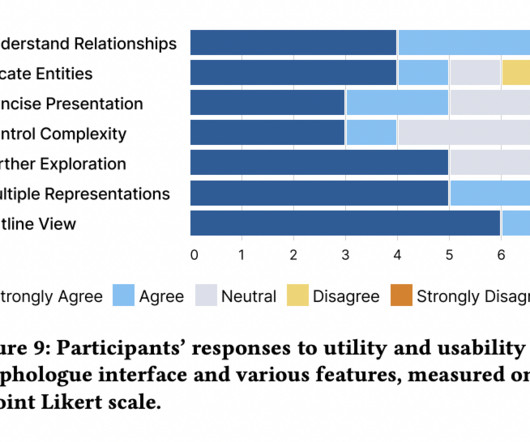

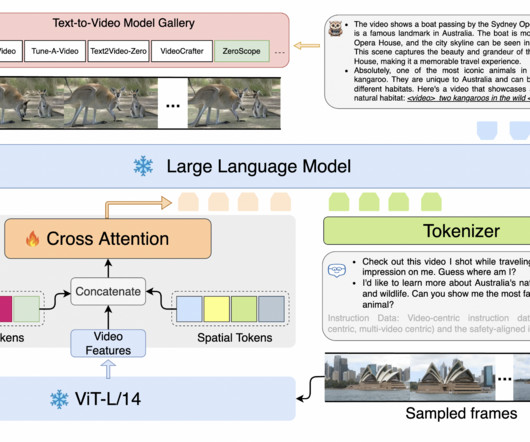

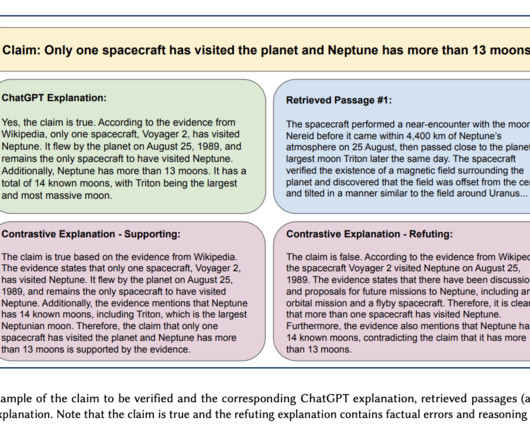

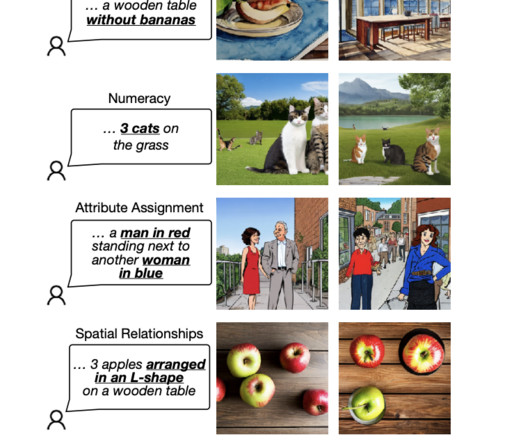

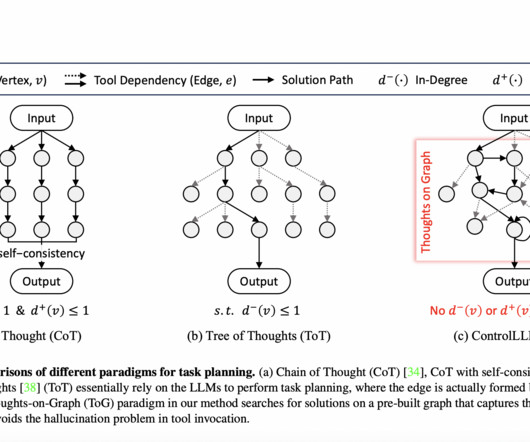

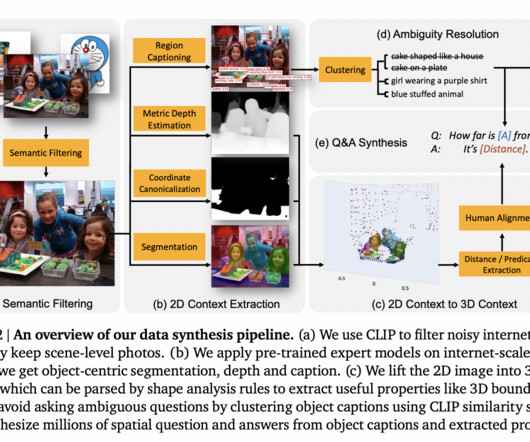

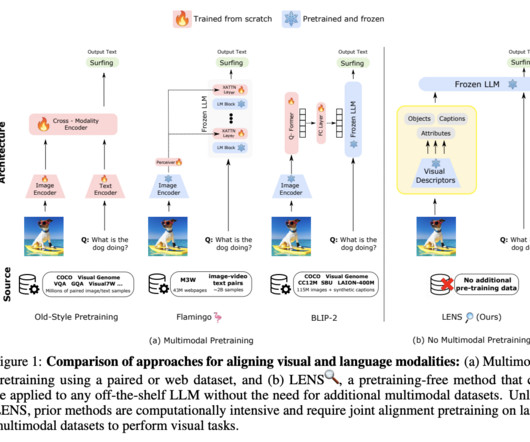

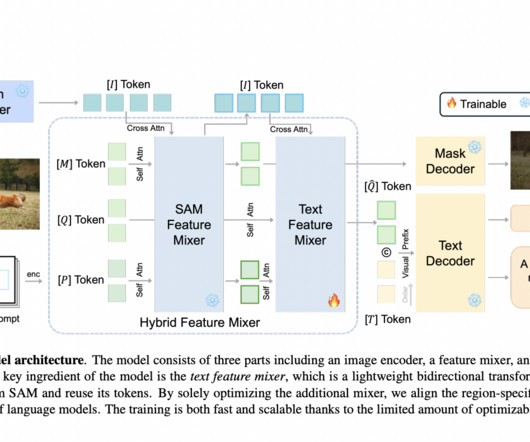

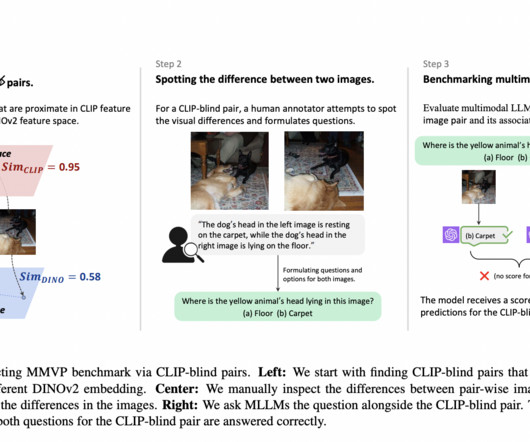

The development of multimodal large language models (MLLMs) represents a significant leap forward. These advanced systems, which integrate language and visual processing, have broad applications, from image captioning to visible question answering. If you like our work, you will love our newsletter.

Let's personalize your content