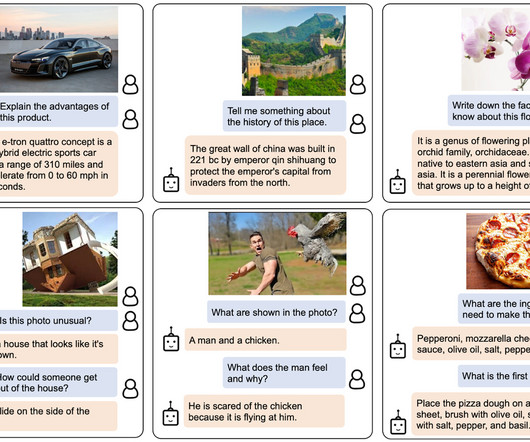

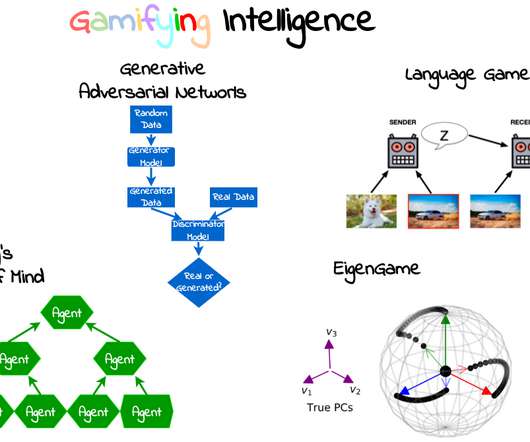

New Neural Model Enables AI-to-AI Linguistic Communication

Unite.AI

MARCH 24, 2024

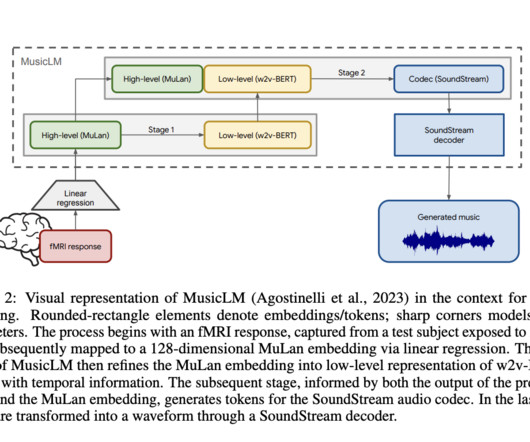

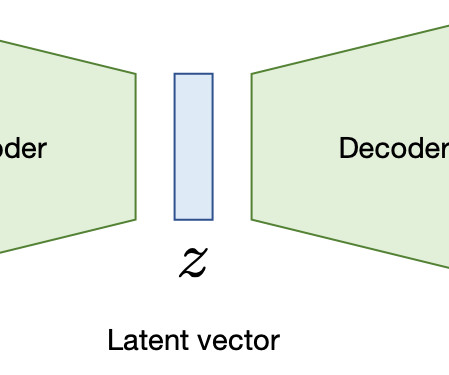

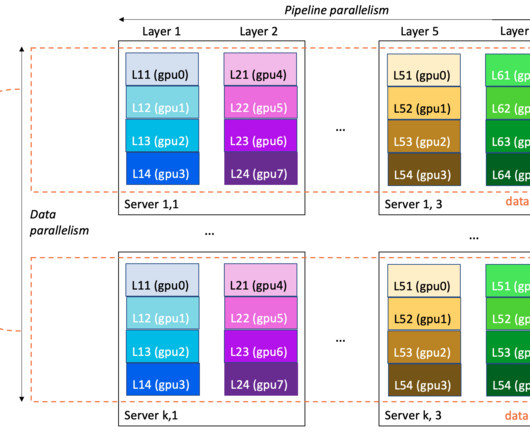

Central to this advancement in NLP are artificial neural networks, which are loosely inspired by the biological neurons in the human brain. These networks mimic, in simplified form, the way neurons transmit electrical signals: information is processed as numerical values passed between interconnected nodes.
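To make "interconnected nodes" concrete, here is a minimal sketch in Python of how a single artificial node combines its inputs and passes a signal onward. It is an illustration only, not the model described in the article: the function names (`sigmoid`, `neuron`, `layer`), the weights, and the choice of a sigmoid activation are all assumptions made for this example.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias):
    """One node: weighted sum of its inputs plus a bias, then an activation."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def layer(inputs, weight_rows, biases):
    """A layer is several nodes that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical tiny network: two inputs -> two hidden nodes -> one output node.
# The weights are arbitrary values chosen for illustration, not trained ones.
hidden = layer([1.0, 0.0], [[0.5, -0.5], [-1.0, 1.0]], [0.0, 0.0])
output = neuron(hidden, [1.0, 1.0], 0.0)
print(output)
```

Each node's output becomes an input to the nodes in the next layer, which is the sense in which the nodes are interconnected. In a real network the weights would be learned from data rather than set by hand.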
