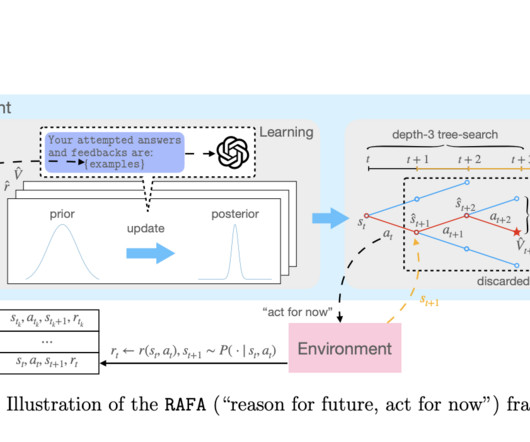

This AI Research Introduces ‘RAFA’: A Principled Artificial Intelligence Framework for Autonomous LLM Agents with Provable Sample Efficiency

Marktechpost

OCTOBER 24, 2023

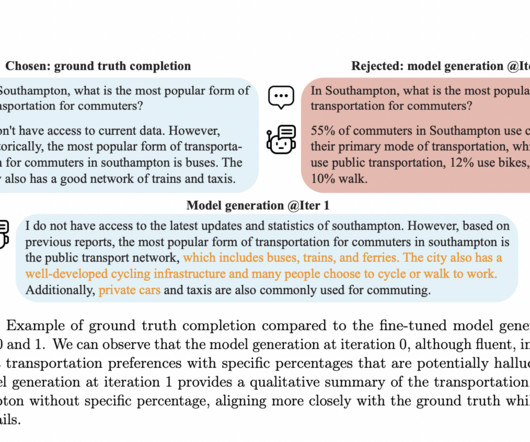

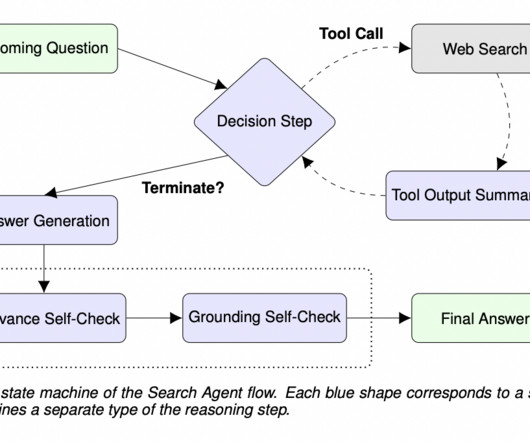

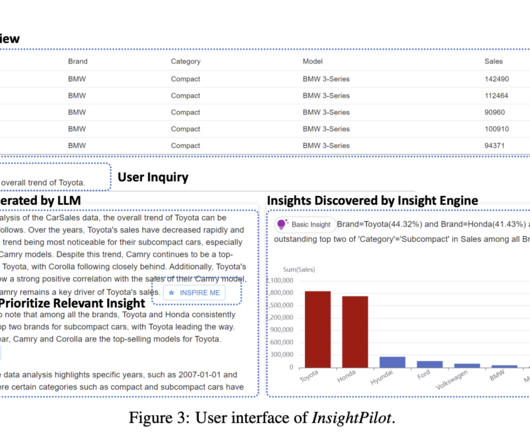

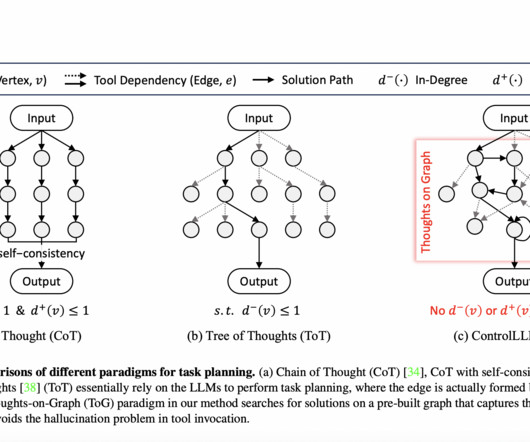

Within a Bayesian adaptive MDP paradigm, they formally describe how to reason and act with LLMs. Similarly, they instruct LLMs to learn a more accurate posterior distribution over the unknown environment by consulting the memory buffer and designing a series of actions that will maximize some value function. We are also on WhatsApp.

Let's personalize your content