Neural Networks Achieve Human-Like Language Generalization

Unite.AI

OCTOBER 25, 2023

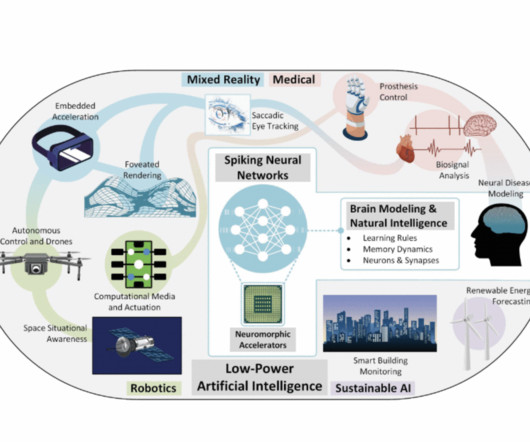

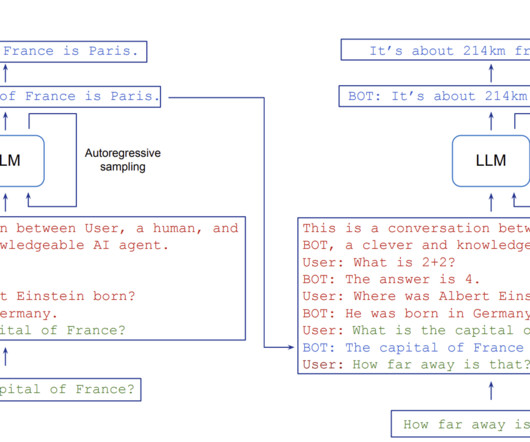

In the ever-evolving world of artificial intelligence (AI), scientists have recently announced a significant milestone: a neural network that generalizes language with human-like proficiency. Humans do this intuitively, yet the ability has long been a difficult frontier for AI.

Let's personalize your content