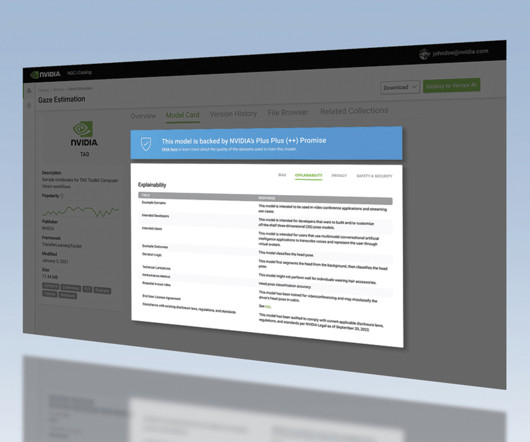

Generative AI in the Healthcare Industry Needs a Dose of Explainability

Unite.AI

SEPTEMBER 13, 2023

The remarkable speed at which text-based generative AI tools can complete high-level writing and communication tasks has struck a chord with companies and consumers alike. In this context, explainability refers to the ability to understand the logic pathways of any given large language model (LLM).
