Explainable AI: A Way To Explain How Your AI Model Works

Dlabs.ai

AUGUST 16, 2022

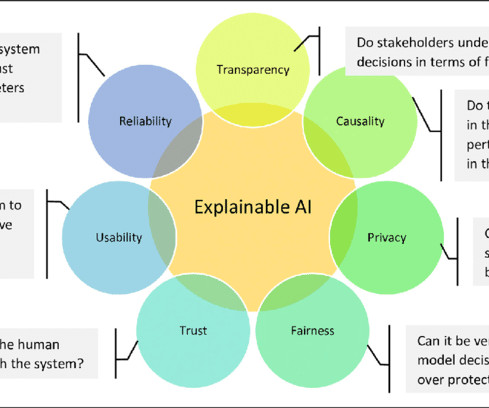

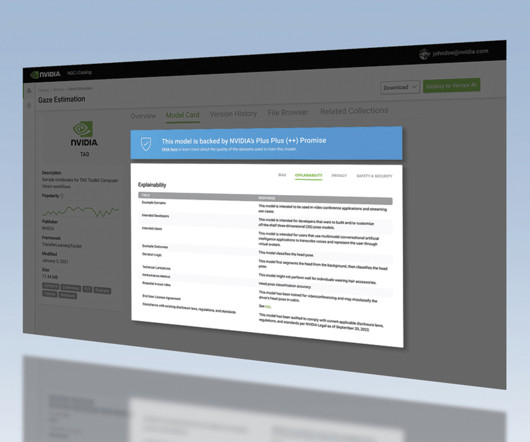

One of the major hurdles to AI adoption is that people struggle to understand how AI models work. Explainable artificial intelligence addresses this challenge by showing how a model arrives at its conclusions. So what is explainable AI? Let’s begin.