Don’t pause AI development, prioritize ethics instead

IBM Journey to AI blog

MAY 2, 2023

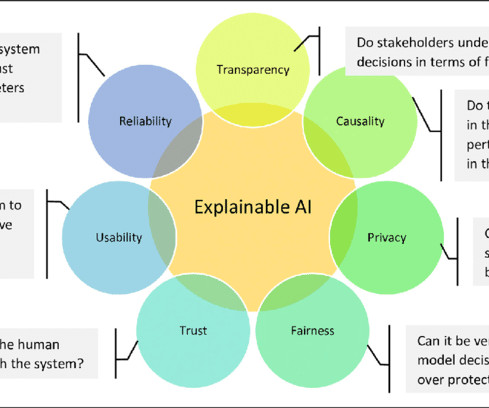

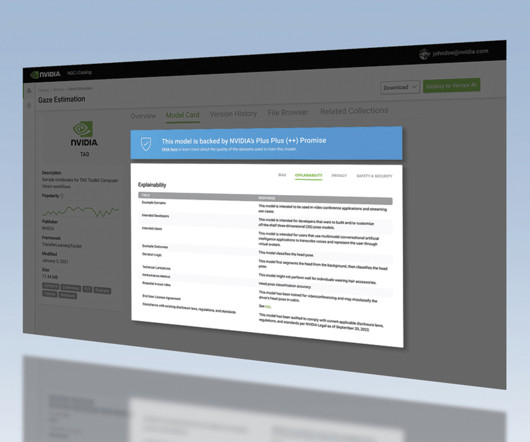

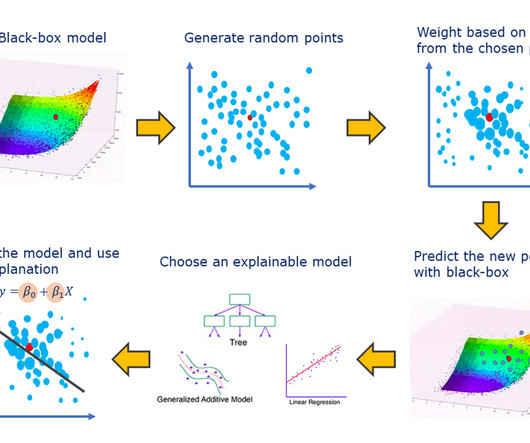

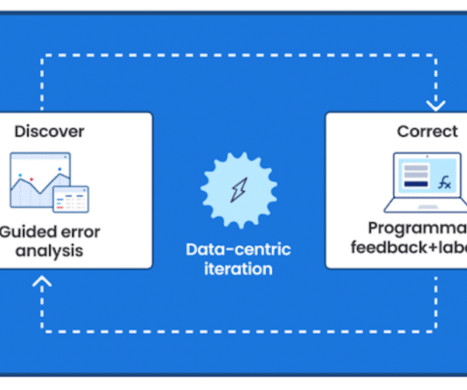

That is why IBM developed a governance platform that monitors models for fairness and bias, captures the origins of the data they use, and can ultimately provide a more transparent, explainable and reliable AI management process. The stakes are simply too high, and our society deserves nothing less.

Let's personalize your content