Ovis-1.6: An Open-Source Multimodal Large Language Model (MLLM) Architecture Designed to Structurally Align Visual and Textual Embeddings

Marktechpost

SEPTEMBER 29, 2024

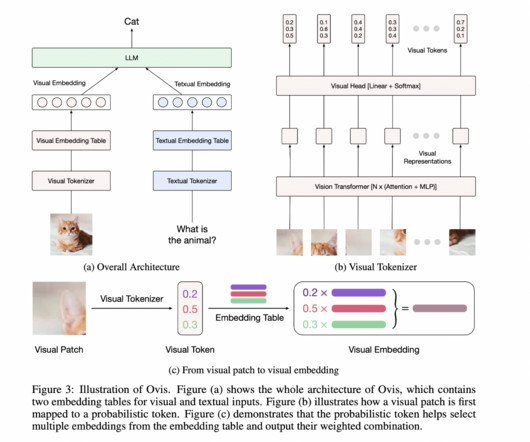

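Ovis-1.6 is a new multimodal large language model (MLLM) that structurally aligns visual and textual embeddings. It targets a mismatch between the two modalities: text tokens are embedded by looking up rows of an embedding table, whereas most MLLMs feed visual features to the language model as unstructured continuous vectors produced by a projector. In the RealWorldQA benchmark, Ovis outperformed leading proprietary models such as GPT-4V and Qwen-VL-Plus, scoring 2230 compared to GPT-4V's 2038.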
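The article does not spell out how the alignment works. Our understanding of the Ovis paper is that each image patch is mapped to a probability distribution over a learnable "visual vocabulary", and its embedding is the probability-weighted sum of rows in a visual embedding table. This mirrors how a text token selects one row of the text table. The pure-Python sketch below illustrates that idea under this assumption. The function names, toy table sizes and random values are hypothetical and are not taken from Ovis's actual code.

```python
import math
import random


def softmax(logits):
    # Numerically stable softmax: subtract the max before exponentiating
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_lookup(probs, table):
    # Probability-weighted sum of table rows: sum_k probs[k] * table[k]
    dim = len(table[0])
    out = [0.0] * dim
    for p, row in zip(probs, table):
        for j in range(dim):
            out[j] += p * row[j]
    return out


def text_embedding(token_id, text_table):
    # Text: a discrete token is a one-hot distribution, so the
    # weighted lookup reduces to selecting a single row of the table
    one_hot = [0.0] * len(text_table)
    one_hot[token_id] = 1.0
    return weighted_lookup(one_hot, text_table)


def visual_embedding(patch_logits, visual_table):
    # Vision (assumed Ovis-style): a visual tokenizer scores the patch
    # against a visual vocabulary; softmax gives a distribution over
    # "visual words", and the embedding is the expected table row
    return weighted_lookup(softmax(patch_logits), visual_table)


# Hypothetical toy sizes; real models use far larger tables
random.seed(0)
TEXT_VOCAB, VISUAL_VOCAB, DIM = 8, 16, 4
text_table = [[random.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(TEXT_VOCAB)]
visual_table = [[random.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(VISUAL_VOCAB)]

t = text_embedding(3, text_table)

# A patch whose logits strongly favour visual word 5 lands near row 5
logits = [0.0] * VISUAL_VOCAB
logits[5] = 20.0
v = visual_embedding(logits, visual_table)

print(len(t), len(v))  # prints "4 4": both live in the same DIM-sized space
```

In this sketch a text token is simply the one-hot special case of the same weighted lookup. Both modalities therefore reach the language model through the same kind of embedding table, which is one way to read "structural" alignment.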