Origins of Generative AI and Natural Language Processing with ChatGPT

ODSC - Open Data Science

JUNE 9, 2023

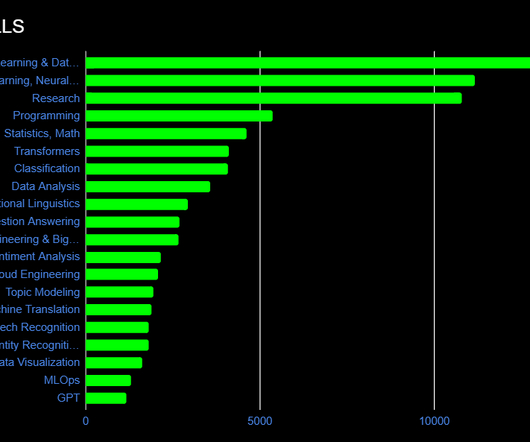

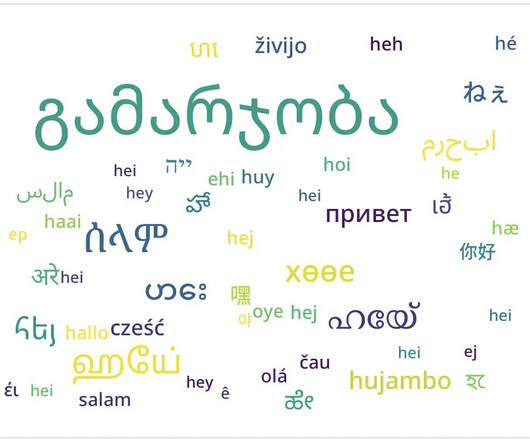

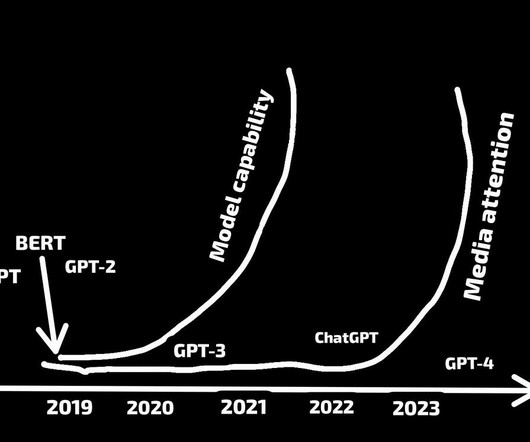

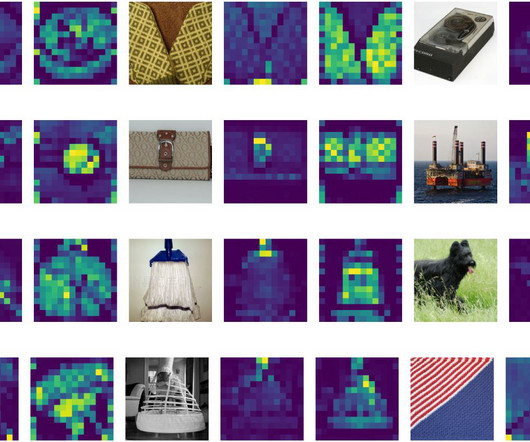

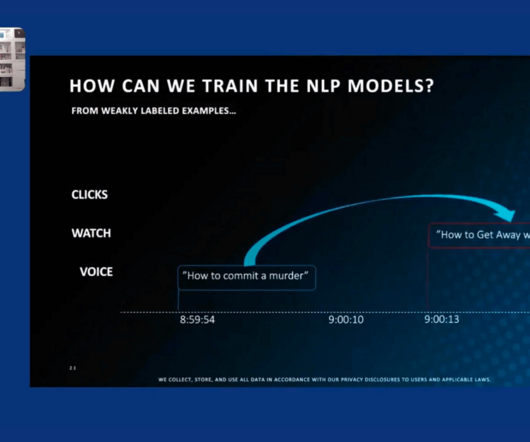

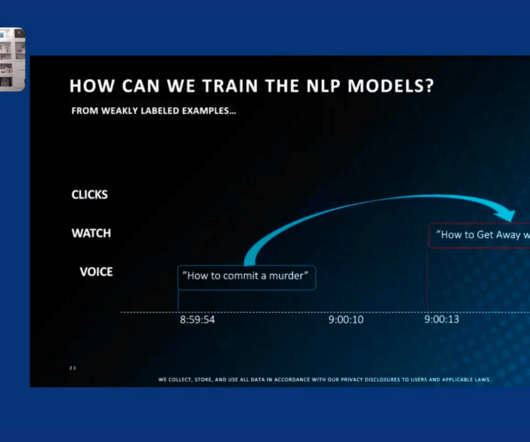

Once word vectors have been learned, they can be used in various natural language processing (NLP) tasks such as text classification, language translation, and question answering. [...] This allows BERT to learn a deeper sense of the context in which words appear. [...] ChatGPT (2022): ChatGPT is built on a model from the GPT-3.5 series.
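To make the point about reusing learned word vectors concrete, here is a minimal sketch of text classification built on top of them. The vectors, the `WORD_VECTORS` table, and the helper names (`embed`, `cosine`, `classify`) are all hypothetical and hand-picked for illustration; real embeddings are learned from data and have far more dimensions.

```python
import math

# Hypothetical 3-dimensional word vectors, hand-picked for illustration only.
# Real learned embeddings typically have hundreds of dimensions.
WORD_VECTORS = {
    "great": [0.9, 0.1, 0.0],
    "love": [0.8, 0.2, 0.1],
    "awful": [-0.9, 0.1, 0.0],
    "hate": [-0.8, 0.2, 0.1],
    "movie": [0.0, 0.9, 0.3],
    "film": [0.0, 0.8, 0.4],
}


def embed(text):
    """Average the vectors of the known words in text; unknown words are skipped."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# One prototype vector per label, built from a few seed words (an assumption
# of this sketch, standing in for a trained classifier).
LABEL_PROTOTYPES = {
    "positive": embed("great love"),
    "negative": embed("awful hate"),
}


def classify(text):
    """Return the label whose prototype is most similar to the text's vector."""
    vector = embed(text)
    return max(LABEL_PROTOTYPES, key=lambda label: cosine(vector, LABEL_PROTOTYPES[label]))


print(classify("I love this film"))
print(classify("what an awful movie"))
```

Averaging word vectors discards word order, which is one reason contextual models such as BERT can capture more than static embeddings do.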
