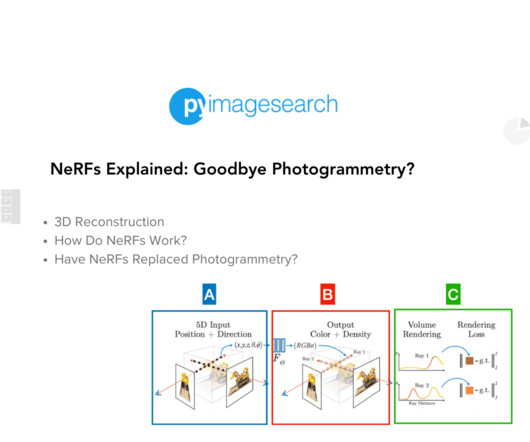

NeRFs Explained: Goodbye Photogrammetry?

PyImageSearch

OCTOBER 28, 2024

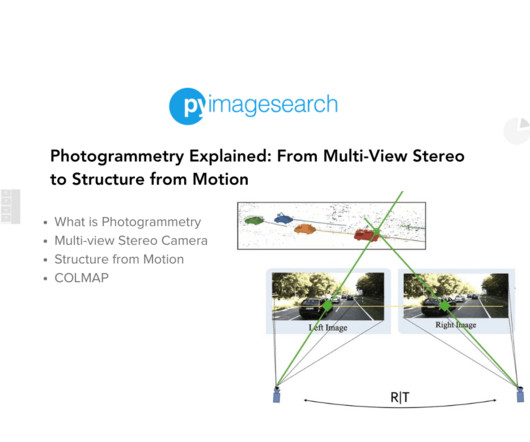

Table of Contents

- NeRFs Explained: Goodbye Photogrammetry?
- Block #A: We Begin with a 5D Input
- Block #B: The Neural Network and Its Output
- Block #C: Volumetric Rendering
- The NeRF Problem and Evolutions
- Summary and Next Steps
- Next Steps
- Citation Information

How Do NeRFs Work?
